Since launching this book in 2017, I've had this conversion project on my to-do list. If you're new to me or the book, you're in for a ride. When I started writing this, I was a digital advertising leader; by the end, I had burned out of the industry. Nowadays I'm publishing how-to guides for networking equipment. The juice was worth the squeeze. I'm making this available for free so you might gain some direction or perspective on the world we find ourselves surviving in. The themes covered in this book underpin many of the earth-shaking events that increasingly take place online. May yours be a "good one".

Best,

Corey

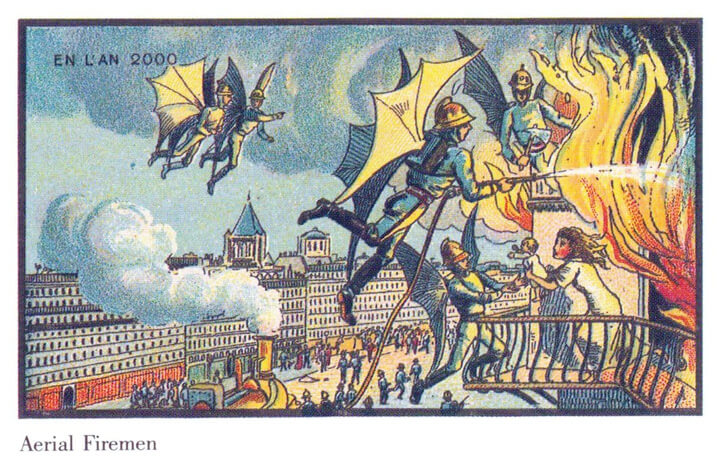

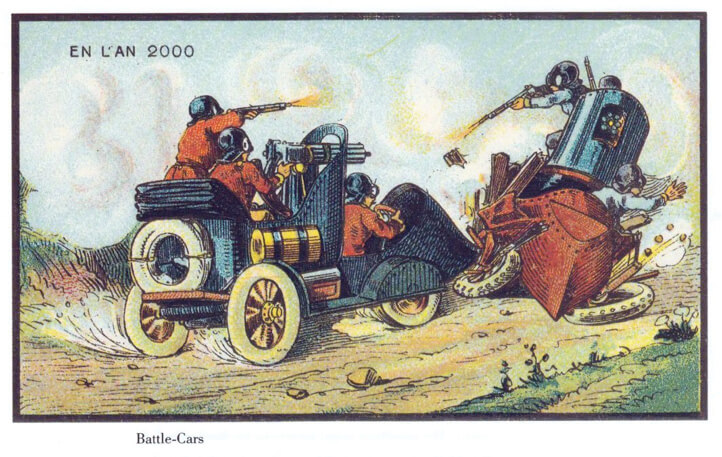

Welcome to the future! I’m glad you’ve made it. Here’s what you need to know: we haven’t yet gotten around to inventing flying cars, futuristic metallic clothing, or shark-sized interactive 3D holograms.

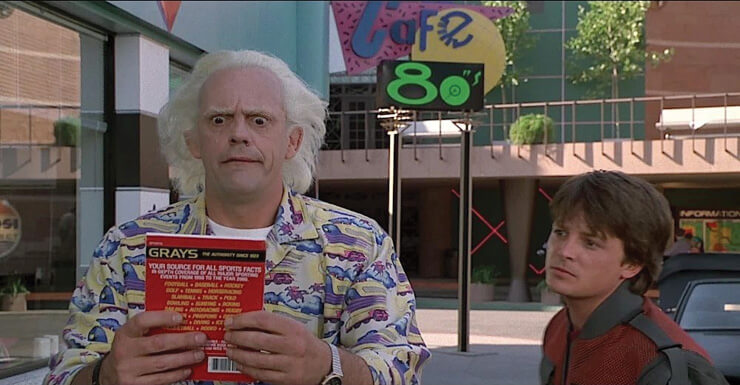

The first time any of us saw the film Back to the Future Part II, it was a jaw-dropping vision of the future. Well, our presumed future anyway. Marty McFly zipped through space and time to 2015 and was treated to a grand tour of an incredible new world powered by new and exciting technologies. Audiences across the world were sold hook, line, and sinker. We banked on the notion that in the future we’d all be riding around on hoverboards and eating healthy meals cooked in a matter of seconds. The film portrayed a veritable World’s Fair of what’s next in technology, but if you look around, ‘the future’ isn’t always readily visible. As for everyone wearing shiny metallic clothing, perhaps we’re just not there yet. However, as we’ll find out together, in a great many ways our future has turned out to be much more exciting than we could have imagined.

The intent of this book is to help build technical literacy for everyone who is curious. We are here to demystify the tech world of today as well as illuminate where we may be tomorrow. While reading this book, you’ll notice that I make frequent use of the term ‘we.’ My use of ‘we’ is shorthand for you and me, as well as the stakeholders in the technology at hand. To deliver this message in a manner that’s comfortable, wherever possible I aim to write as if you and I were sitting in the same room having a one-on-one conversation. We’ll pull back the curtain of technology to reveal the good, the bad, and the awesome. We’ll achieve this goal by building up core concepts as a primer before they become applicable, in turn providing insight into the technological and scientific developments we’ll review. We all have a collective stake in understanding their ramifications and their place in our lives.

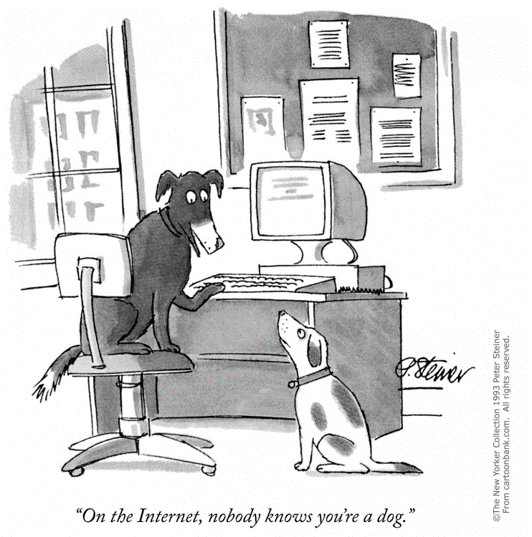

If society accepts technology as a component of our culture, our societies will benefit from the value gained by having an informed citizenry. Throughout this book, we’ll collect and examine evidence, building a case for an overall optimistic view of technology, albeit with caveats. Once we accept technology’s place in society, we can examine how these advances will affect our day-to-day lives. This book will be an optimistic and educational primer on the amazing developments happening today in science and technology. Meanwhile, we’ll acknowledge the risks presented by these new developments and the potential options for reducing or dampening their negative effects. It's important to note that when we speak about technology, we’ll use the term broadly rather than splitting it into categories, inclusive of all types - bio-, agriculture, banking technology, nanotechnology, media, military, travel, even waste technology. Technology is pervasive and foundational throughout our entire lives. In a sense, what separates us from other animals is our use of technology, enabled in part by our opposable thumbs. There's them, and there's us, and dogs and cats are apparently along for the ride too. Our entire families are going through this ride, great Scott! Let’s do what we can to make sure it’s a good one.

Technology advances through the practical application of scientific principles to improve society's tools. For prehistoric humans, conscious perception developed over many generations alongside expanding ‘cognitive ability.’ Cognitive ability refers to the brain’s capacity to tackle tasks ranging from the simple to the complex. The senses themselves developed into the eyes and ears we know and love today. Through generational and genetic variation, people developed differently or with minor defects, such as color blindness. As brain size and function increased, prehistoric humans were able to observe and reflect upon the world around them, which enabled them to shape the environment to their collective benefit. This began with the use of rocks to smash objects or animals. Early humans later observed that rocks flake when smashed against each other, and they used this to create sharp edges. Sharpened rocks, perhaps the first true form of technology, certainly proved a useful tool in the dim, harsh environments that pervaded prehistory. Later tools included innovations like using fire to harden the tips of wooden sticks. This allowed prehistoric humans to work the soil efficiently and yield more productive crops.

It’s not hard to imagine the dual use of these fire-hardened sticks in this very violent period in our history. Life was brutal and short. However, it turned out that poking both the ground and other animals was an intuitive use of technology. No training was needed: just point it toward the ground or an animal, and push. Lucky for us, poking animals with sticks was especially good for business. You poke a rabbit with a stick, and all of a sudden you can eat it! It must have been a revelation for early humans. Neanderthals and Homo sapiens must have felt the way Americans feel when they walk into Costco, surrounded by beautifully expansive consumerism! You see something over there that’s furry, smells delicious, and has four legs - let’s eat it! Eventually we figured out which animals made better friends than BBQ, and we kidnapped ourselves some pets. Of course, nowadays if you poke someone, you’ll just get unfriended on Facebook.

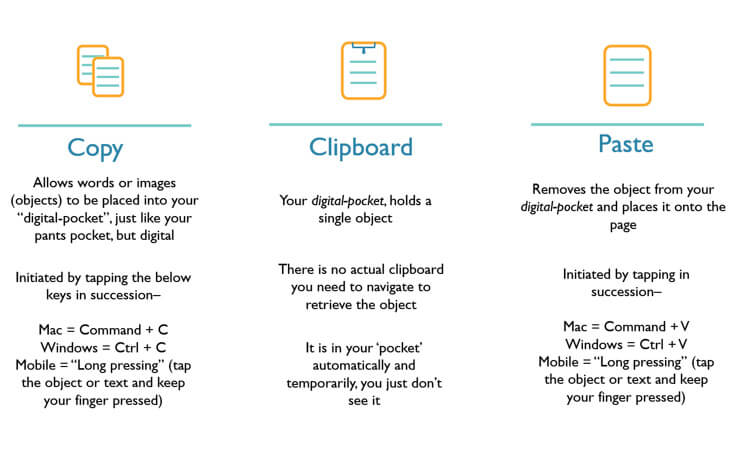

Hunting with fire-hardened pointed sticks provided more fat-laden meat, which was a driving factor in increased cognition. Over time, the human body began to devote more and more energy to the brain. Other primates, by contrast, evolved to devote more metabolic energy toward muscle use,1 which is why a chimp can beat Arnold Schwarzenegger2 at arm wrestling. While other primates continued to be the ‘jocks’ in high school, we humans were relegated to the ‘nerd’ table. We’ve been working on our social skills ever since. Meanwhile, our brain went on to consume 20% of our body’s total energy,3 a great investment I’d wager. Our enhanced cognition enabled increasingly complex social hierarchies via language, which unlocked cooperation among early humans who were not kin. As noted cognitive scientist Steven Pinker puts it, “Language not only lowers the cost of acquiring a complex skill but multiplies the benefit. The knowledge not only can be exploited to manipulate the environment, but it can be shared with kin and other cooperators.”4 Pinker goes on to assert that this is because information can be duplicated without loss. If I tell you that banging these types of rocks together sparks a fire, I haven’t lost that knowledge. Food for thought: this same principle applies to information stored on devices across the world, where you’re always a copy and paste away from recreating text or data without loss. The same goes for the work we create with software like Microsoft Office or Adobe’s suite of media tools.

What about the tools we have now? How do they further extend our perception of the world around us? The tools at our disposal now are far different from, and less intuitive than, a fire-hardened pointed stick. Generations of scientific advancement have culminated in technologies that affect every aspect of our lives. The question for technologists, designers, and engineers becomes: how do we create technology-driven solutions to today’s challenges? Further, how do we ensure that we create intuitive solutions for the intended stakeholders? These stakeholders may be large swathes of people who have only vague ideas of how the technology itself works, or a small community with a unique problem. The goal of this book is to bring those stakeholders to the table. A better understanding of the tech world allows us, first, to clearly define our issues and needs and, second, to create technical solutions that meet those needs. Your inclusion in this process will help yield a tomorrow that works best for everyone.

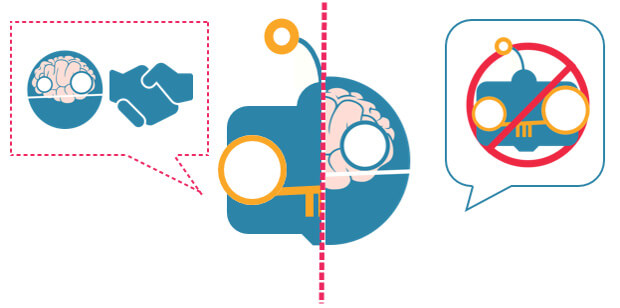

My concern with people not understanding technology stems from how technology is portrayed in films, television shows, and, most importantly, the news. Few people understand web technology, let alone computer science fields like machine learning. In the absence of understanding, hyperbole often wins out over reasoning and critical thinking. Terminator 2: Judgment Day painted a bleak future in which humans are oppressed by autonomous killing machines, and The Matrix trilogy painted a similar vision. There’s virtually no end to pop culture examples of technology going wrong. These films tap into an instinctive fear of technology’s possible outcomes, and the relatable characters and vivid images on the screen make those outcomes seem assured. If you were to play word association with most people, as soon as you mention ‘robots’ they’re likely to respond with a word that has a negative connotation. Robots overtaking humans as the dominant species on Earth, while possible, is improbable. The issue is that this mode of thinking becomes the default lens through which we view technology.

There are not enough voices explaining both the promise and the risk of advancing technology. My fear is that pop culture and media have handed our brains shoddy mental shortcuts for thinking about technology. These shortcuts take the form of heuristics, which enable quick decisions in situations where information is limited or incomplete. Processing all available information about any topic, technology or otherwise, is impractical to say the least. Heuristics speed up our responses by allowing mental leaps, easing the cognitive load and energy demands on our brains. As a result, if you were to ask people on the street how their smartphones work, they’d likely describe them in fantastical, almost magical terms. They would not describe them as devices following structured, prescribed protocols, which is what they are. If our culture deems technology to be magic, we’ll never fully understand it or its place in our lives. Realizing technology’s potential means elevating prosperity for all. At the very least, the good news is that the following five very odd behaviors are increasingly rare, if not extinct, thanks to the proliferation of technology:

1. Remembering all of your friends’ complete phone numbers

2. Slapping a television in hopes of reducing visual distortion

3. Using house furniture as a preservation of family wealth

4. Having ice delivered to your home to refrigerate food

5. Having no fewer than five remotes per TV

On the flip side, here are five behaviors that exist now that would seem insane to the people who practiced the first five:

1. Ordering an item online to be delivered an hour later

2. Inviting strangers from the internet to stay in your home for a fee

3. Getting in cars with strangers to get to your destination

4. Video chatting with your doctor

5. Using devices that translate both spoken and written language in real time

On the grand scale of humanity, every one of these ten behaviors would seem peculiar to some generation. That constant change in behavior is a vital component of human social development. These behaviors are part of the ephemera of human culture, each representing a moment in time. Like the TV Guide, their only purpose today is to recall how it all used to be. We can each recall the environment in which these cultural touchstones occurred. For kids of the '90s, blowing air into a Nintendo cartridge like a harmonica was just something you did in hopes of playing some video games. Our brains are hardwired to recognize patterns in everything we perceive, and behaviors like this develop out of conditioning. We blew into the cartridge and popped it back in. The feedback on whether it worked was immediate, and if it failed, we could quickly repeat it. Our brains needed only one success to declare cartridge-blowing a valid method of troubleshooting.

In the most famous example of classical conditioning, Pavlov’s dogs, the physiologist Ivan Pavlov rang a bell before feeding his dogs. Over time, the dogs’ brains recognized the stimulus of the bell as a precursor to being fed, so salivating at its sound became automatic and involuntary. After that, every time the bell rang, the dogs expected food to be on its way. This psychological phenomenon also applies to humans: we use past learning to inform future behavior.

If people do not understand the technology behind our devices, we’ll mistakenly salivate at the ‘ringing of every bell.’ To be fair, I’ve blown air into more than my fair share of Nintendo cartridges. That’s why I’m here: I want to help you recognize the patterns underlying technology and connect the dots. I’ve watched the internet grow up from AOL community chat rooms into an exciting multi-trillion-dollar driver of ingenuity. From 3D printing to artificial intelligence to augmented reality, we’ll examine where these technologies are today, as well as where they might be in the next five to ten years. Technology has advanced to the point that voodoo superstition will no longer get us by. The stakes are so high and so far-reaching that reframing our decision-making processes around technology is vital.

To achieve this, we’ll make use of textbook-style tangential breakaways, where we sidestep the topic at hand to review the critical concepts at play.

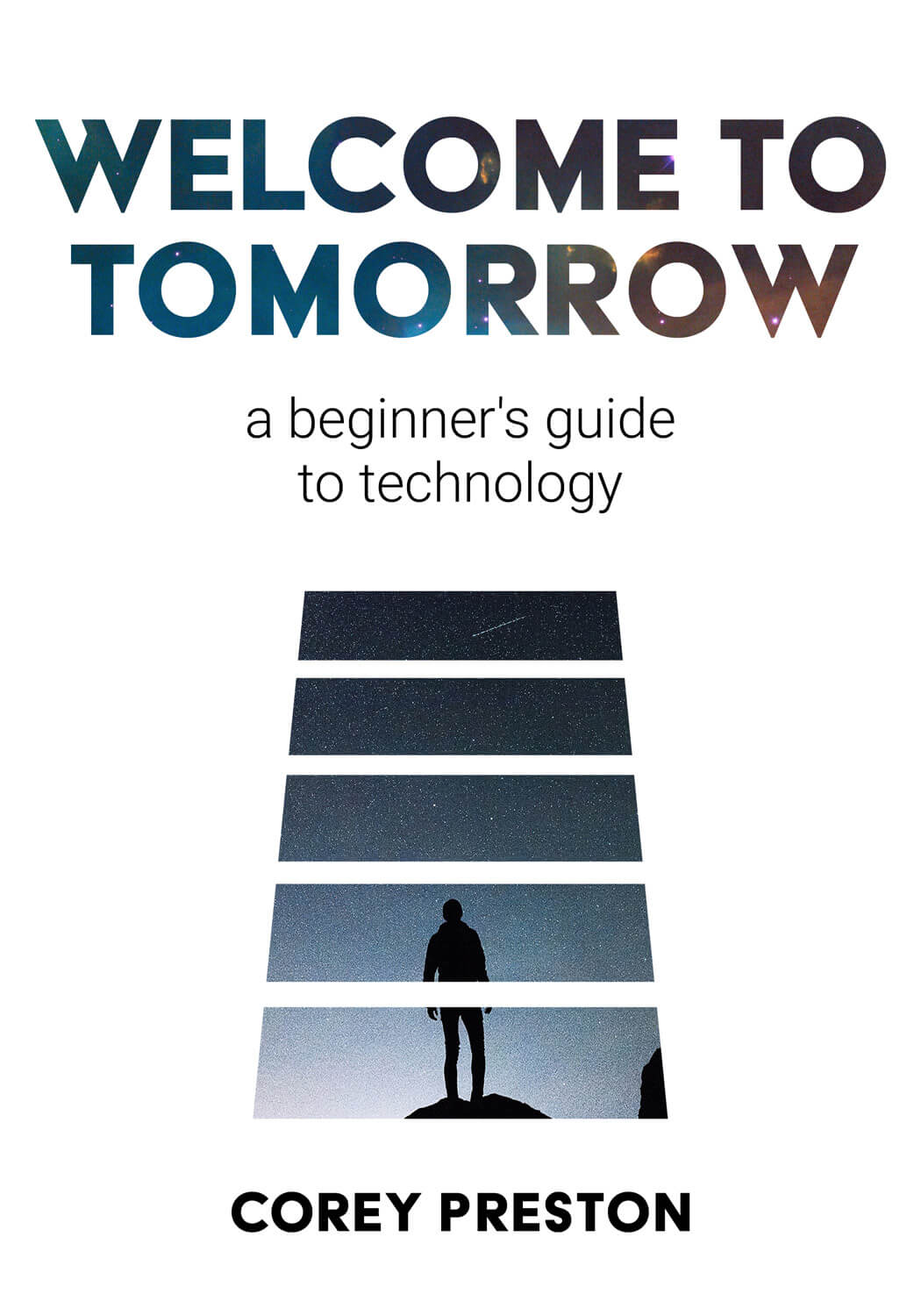

My aim is to approach the material in a conversational manner that is as accessible as possible. In recent years, I’ve noticed a largely unaddressed gap in educational content aimed at helping mainstream America understand technology. I’m writing this book for the people who feel that technology moves too fast for them to keep up, and for the people who feel like they’re missing a train that so many have already hopped aboard. Scientists and researchers can frequently be found on TED Talks or YouTube giving fantastic lectures that seem aimed at fellow scientists and researchers. For an outsider, understanding technological concepts can be confusing or even daunting. Much like a 101 college course, here you need only bring your curiosity about the tech world around us to gain a firmer understanding of the basics. We’ll add jokes to moisten dry material, and visual aids to illustrate where words fail.

To be clear, the material we’ll be covering assumes only a basic familiarity with everyday technology. From there, we’ll build up the concepts and terminology together, and by the end you should feel empowered with an understanding of how the world spins nowadays.

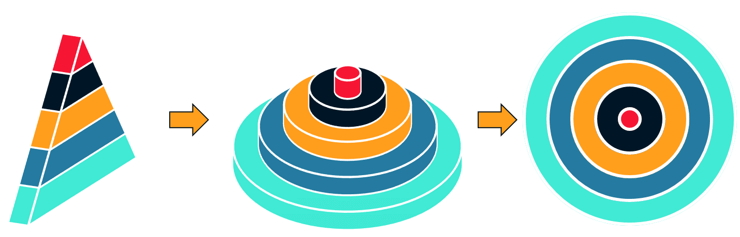

Fig 1-1. - Spectrum of computer science knowledge, not to scale.

If you happen to be an engineer, this might all read like yesterday’s news to you. Furthermore, you’ll find that this book may lack some of the nuance that your expert knowledge affords. It is not my intention to explain concepts in a reductive manner, but we will simplify wherever possible. Generally speaking, engineers are incredibly up to date on their geek news. My hat has always been off to you lot.

While you are reading this book, I’d encourage you to keep your favorite device or laptop nearby to check out some of the topics we cover in a live setting. You may find yourself thinking something sounds interesting enough to check out right then, only to find the device is in the other room. Let’s do our best to keep first-world problems in check by keeping those devices handy!

We’ll buckle into this crazy ride with an examination of how our senses assist our understanding, and from there we'll illuminate the spectrum of technology in its many forms. Throughout, we’ll spotlight the good and the bad, the risks and the opportunities. This book’s purpose is to include any inquisitive mind who desires a peek inside the tent of the three-ring circus that is the world of technology.

At the core of every innovation since the dawn of humankind has been intellectual curiosity, a human desire to understand the inner workings of our world. In terms of civil and economic prosperity, this growth has been driven by the women and men who dedicate their lives to research in many fields of study. In exploring unknown horizons, we continue to discover new things about ourselves and our place in the universe. Like steps in time, these advances allow us to stand on the shoulders of the generations that came before us.

Roaming groups of nomads assembled into tribes, establishing rules and hierarchies of society. Over time, those tribes grew into larger societies and eventually nation-states, creating more social structure and law for the express purpose of individual prosperity. With them came access to technologies and the knowledge to use them. Knowledge of how to improve skills was passed on, bringing order to disorder. People with shared skills assembled into groups, commonly referred to as guilds, to share their knowledge and insight. Eventually this led to incredibly intricate machines with hundreds of moving parts, literally and figuratively, as skill sets joined complementary skill sets and advancement arose. This is all because, thousands upon thousands of years ago, a short, hairy guy or gal creakily got out of bed, possibly hungover, and felt like phoning in the day by finding an easier way to get the job done.

As years and generations passed, our understanding of the world around us progressed. That same fire-hardened stick we discussed earlier would become more specialized, evolving into tools like the hoe, and with these innovations, societies reaped an even more bountiful harvest. Through further innovation, the hoe evolved into the plow, which in turn supported a society of yet more humans. As quaint as it may seem now, the simple hoe was a revolutionary tool. It fostered agrarian understanding, which allowed early humans to increase in population and social complexity at rates never before seen.

The technology of today enables levels of productivity that would shock previous generations, and its use would absolutely terrify our short, hairy prehistoric ancestors. Fully unleashing the impact of technology requires levers that are not immediately apparent when using things like mobile devices or apps. Let’s dive into the foundational concepts that establish a baseline for understanding and leveraging technology.

Hardware - the physical components (motherboards, computer chips, etc.) that by themselves do nothing but have the potential to operate. Think of your muscles.

Software - digital instructions that tell hardware what to do, from displaying an image to playing a video. Think of both your passive and active thoughts: your lungs keep breathing even though you do not actively command them to.

The connection between hardware and software is the language they use to communicate with each other. In the vocabulary review below, we discuss the way information is communicated between them.

When thinking about the ways computers store information, it’s very easy to get overwhelmed. Between ‘gigabytes’ and ‘megabytes’ or ‘gigahertz’ and ‘megahertz,’ they do not make it easy. By the end of this vocabulary review you will feel more comfortable with seemingly abstract concepts. For now, let’s do some learning:

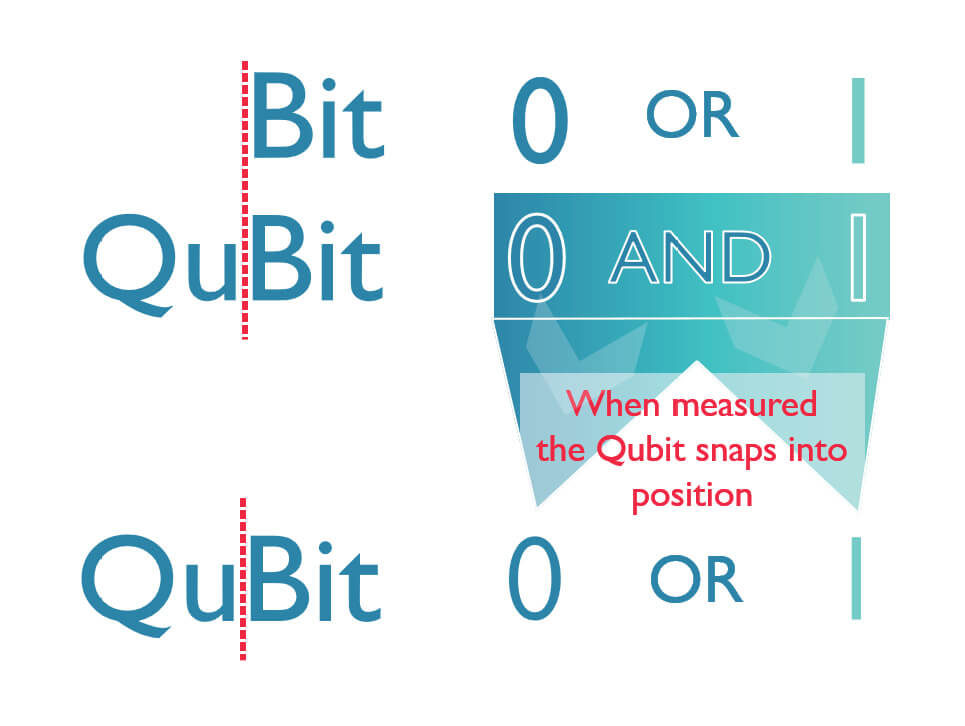

Bit - a binary digit (a 1 or a 0), the most basic unit of information a computer understands. If you could draw a comic book style thought bubble over a computer, it would contain nothing but these bits as 1s and 0s.

Think of a bit as a single letter in the alphabet. Even a single letter can carry meaning. For example, ‘I’ denotes me as an individual and is communicated by only a single letter. But generally, letters need to be paired with other letters to communicate effectively. The same holds true for bits.

Byte - A collection of 8 bits.

Now that we have a collection of 8 bits or letters, we can communicate actual concepts, like: FLAPJACK - F (1) - L (2) - A (3) - P (4) - J (5) - A (6) - C (7) - K (8)

Bonus point: the number of bits contained in a byte being 8 is as arbitrary as the direction of Donald Trump’s hair. True story.
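To make the bit and byte relationship a little more concrete, here's a minimal Python sketch. It assumes plain ASCII text, a common encoding in which each English letter is stored as exactly one byte. In a real computer, then, each letter of FLAPJACK takes up a full byte (8 bits) on its own. The helper name `to_bits` is hypothetical and exists only for this illustration.

```python
def to_bits(text: str) -> list[str]:
    """Encode text as ASCII and return each byte as a string of 8 bits."""
    return [format(byte, "08b") for byte in text.encode("ascii")]

word = "FLAPJACK"
bits = to_bits(word)

# Print each letter next to the 8-bit pattern the computer actually stores.
for letter, pattern in zip(word, bits):
    print(letter, pattern)

print(len(bits), "bytes")          # one byte per letter
print(len(bits) * 8, "bits")       # 8 bits per byte
print(2 ** 8, "possible values")   # the number of distinct patterns 8 bits can form
```

Running it shows that FLAPJACK is 8 bytes, or 64 bits. Each byte can hold one of 256 different patterns, which is plenty of room for upper- and lowercase English letters, digits, and common punctuation.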

Kilobyte - 1,000 bytes.

At 1,000 “characters” or letters, we can tell a mostly nonsensical story.

This is the amount of precisely one thousand characters, as you can tell this is much, much more than just a “FLAPJACK”. Pfffff. Yes ma’am (or sir), we are in prime flapjack cruising altitude. Nothing but clouds of powdered sugar atop mountains of (really quite beautiful this time of year) French Toast. I don’t know about you, but I had THE best blueberry pancakes yesterday, it was this great little spot in San Rafael, California called Theresa & Johnny’s Comfort Food. The pancakes... were a stack of two sunny-faced, plate sized rings of perfection. They came pre-buttered. “I can’t even”, as the ladies say. So, needless to say, it was a valiant attempt on my part. Nay, I was not the victor that day. Rather, I was the victor that night! Anyway, so here we are talking about bits and bytes. The point being that this is an order of magnitude more detail (characters) about a single concept. This is simply going from a byte to kilobyte. All of this from a simple one thousand characters. *mostly nonsensical

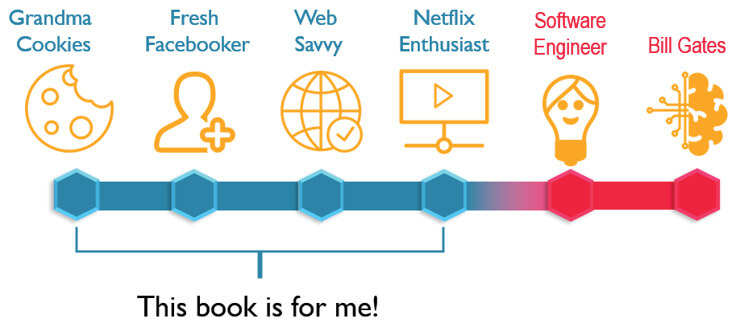

Megabyte - 1,000 Kilobytes

At the size of a whole megabyte (MB) of data/information, we have the ability to not just talk about the pancakes, but to show a beautiful picture.

Fig 1-2. - Evidence of the existence of god. (Courtesy LivingJoyByZoe.com)

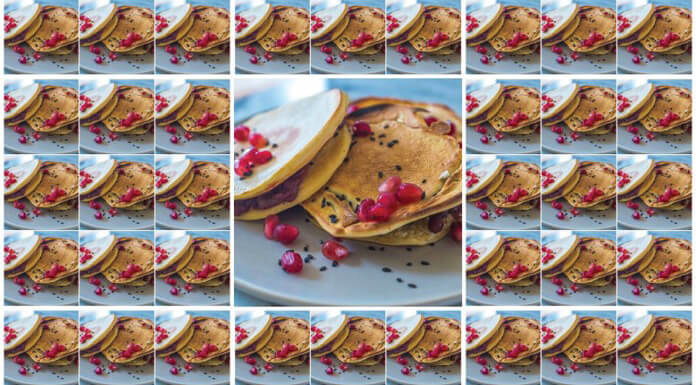

Gigabyte - 1,000 Megabytes

When we get into the gigabyte range, the amount of detail we can convey goes up quite significantly. You could now have thousands of images depicting the glory of flapjacks.

Fig 1-3. - Flapjackagram - coming to an App store near you.

The difference between a megabyte and a gigabyte is that, with a gigabyte, we can now watch a video about making flapjacks in full HD.

Terabyte - 1,000 Gigabytes

With 1,000 gigabytes at your disposal in a storage drive, you’d be able to store a veritable vault of flapjack videos. Some people just fill it with pirated movies and TV shows, about flapjacks of course.

Protip: As you may have noticed, the prefix is the only part of the storage-capacity word that changes. Kilo- becomes mega-, which becomes giga-, and so on. This pattern continues as the amount rises, reaching all the way up to the yottabyte, a 1 followed by 24 zeros:

1 000 000 000 000 000 000 000 000 bytes
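Because each prefix is simply 1,000 times the one before it, the whole ladder is easy to generate. Here's a minimal Python sketch that assumes the decimal (powers of 1,000) convention this chapter uses. The names `PREFIXES` and `bytes_in` are hypothetical and chosen for illustration. Some software, including some operating systems, counts in powers of 1,024 instead, and the sketch also computes what that does to a "1 TB" drive.

```python
# Decimal (SI) prefixes in order; each step is 1,000 times the previous one.
PREFIXES = ["kilo", "mega", "giga", "tera", "peta", "exa", "zetta", "yotta"]

def bytes_in(prefix: str) -> int:
    """Number of bytes in one <prefix>byte, counting in powers of 1,000."""
    return 1000 ** (PREFIXES.index(prefix) + 1)

for name in PREFIXES:
    print(f"1 {name}byte = {bytes_in(name):,} bytes")

# Counting in powers of 1,024 instead (1,024 ** 3 bytes per "GB") is why a
# "1 TB" drive can show up as roughly 931 "GB" on some computers.
print(round(bytes_in("tera") / 1024 ** 3, 1))
```

The last line prints 931.3. The binary-based units are officially called kibibytes, mebibytes, gibibytes, and so on, although many programs still label them KB, MB, and GB.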

You may already be a digital girl, living in a digital world. If not though, let’s start with the basics:

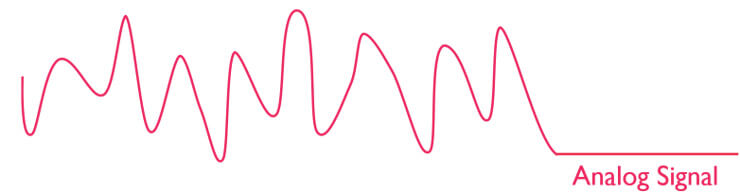

Analog - represents signals and measurements that exist in the physical world around us. Use a ruler to draw a 3-inch line on a piece of paper and you’ve conducted an analog measurement. An analog signal can often be pictured as a smooth wave. It varies continuously, moving up and down through every value in between.

Fig 1-4. - The signal perpetually modulates up and down.

Digital - In computing, a digital signal's high points represent ‘on’ (1) and its low points represent ‘off’ (0). Unlike analog measurements, digital values have nothing in between: each one is read as either on or off. You could not put a ruler to a screen and measure the amount of data flowing across it.

Fig 1-5. - The signal is either on, or off.

Analog signals tend to be vulnerable to interference which can cause a signal to degrade or lose quality. If your memory goes as far back as tube TVs, you’ll remember what static or ‘snow’ looks like. The distortion may come from a hair dryer, an electric drill, or your neighborhood Doc Brown.

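Because digital signals are just on or off, interference doesn't affect them the way it affects an analog signal. Small amounts of noise don't matter, since the receiving device only has to decide whether each bit is a 1 or a 0. A truly faulty cable tends to cause glitches or dropouts rather than a gradually fuzzier picture. This means that no matter how much gold-plated crap they put on the connectors, the signal will still be a 1 or a 0. For everyday people it means this -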

DO NOT spend a ton of money on expensive cables!

This is one of the biggest scams of the 2000s. If you visited a big-box store, they would tell you otherwise, but they were bamboozling you. Digital is digital: when’s the last time you had to slap an HD television to reduce interference?
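Here's a toy Python simulation of why small amounts of interference don't change a digital signal but do stick to an analog one. It is a simplified sketch, not how HDMI or any real cable actually encodes video. It assumes levels of 0.0 for 'off' and 1.0 for 'on', a decision threshold of 0.5, and random interference of up to 0.3 either way. The message bits and the constant names are hypothetical.

```python
import math
import random

random.seed(0)  # fixed seed so the example gives the same result every run

NOISE = 0.3      # assumed maximum interference, in the same units as the signal
THRESHOLD = 0.5  # assumed midpoint between 'off' (0.0) and 'on' (1.0)

# Digital: a hypothetical message sent as on/off voltage levels.
bits = [1, 0, 1, 1, 0, 0, 1, 0]
received = [b + random.uniform(-NOISE, NOISE) for b in bits]

# The receiver only asks "is this closer to on or off?"
decoded = [1 if v > THRESHOLD else 0 for v in received]
print("digital message intact:", decoded == bits)  # prints True

# Analog: the exact level *is* the information, so the noise stays in it.
wave = [math.sin(i / 2) for i in range(8)]
wave_received = [v + random.uniform(-NOISE, NOISE) for v in wave]
distortion = max(abs(a - b) for a, b in zip(wave, wave_received))
print("largest analog distortion:", round(distortion, 2))
```

The noise never pushes a digital level past the 0.5 midpoint, so every bit survives. The analog wave, by contrast, keeps whatever distortion it picked up. If the noise grew beyond that midpoint, bits would start flipping, which matches the glitches and dropouts of a truly damaged cable.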

Important to note - the official standards for cables like HDMI are updated periodically to reflect current needs in digital signal transmission. This means that when you upgrade your TV set in a few years, you may need to buy new cables if you want to keep up with the latest and greatest tech.

In my experience there are many retailers on the web that sell reasonably priced cords and connectors. In the past I’ve used www.MonoPrice.com and have never had a problem.

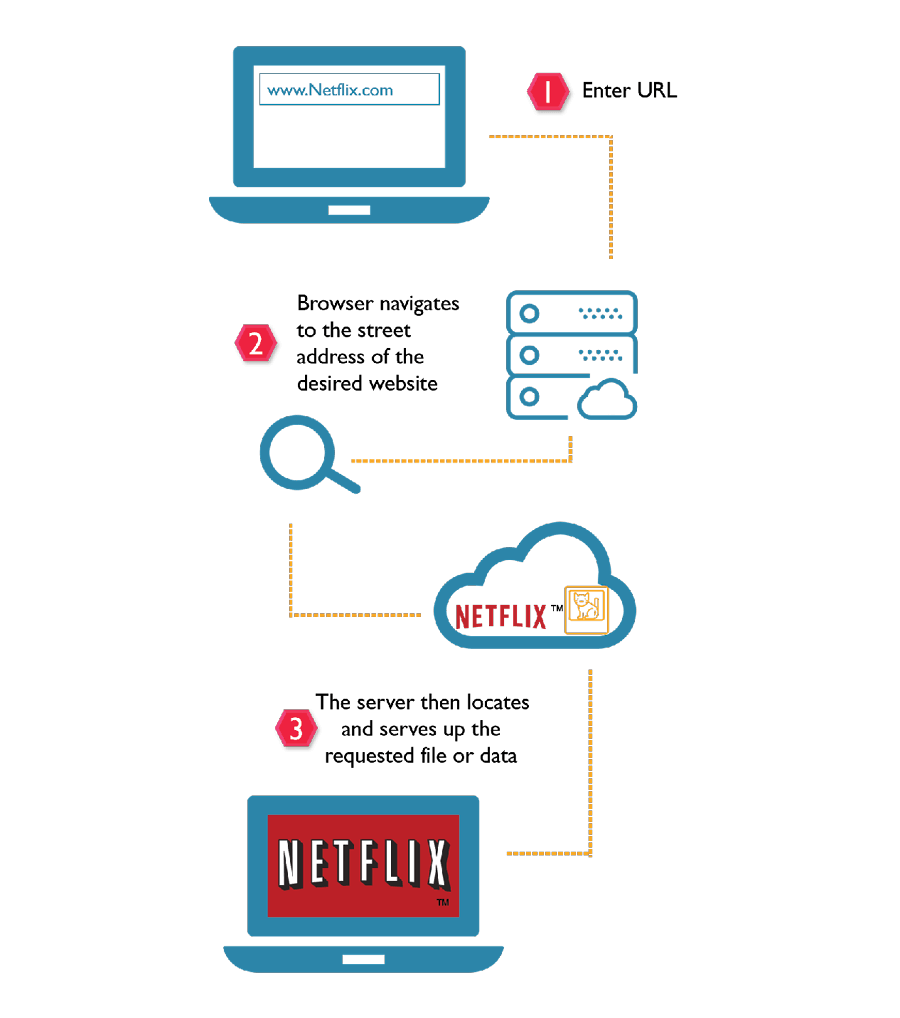

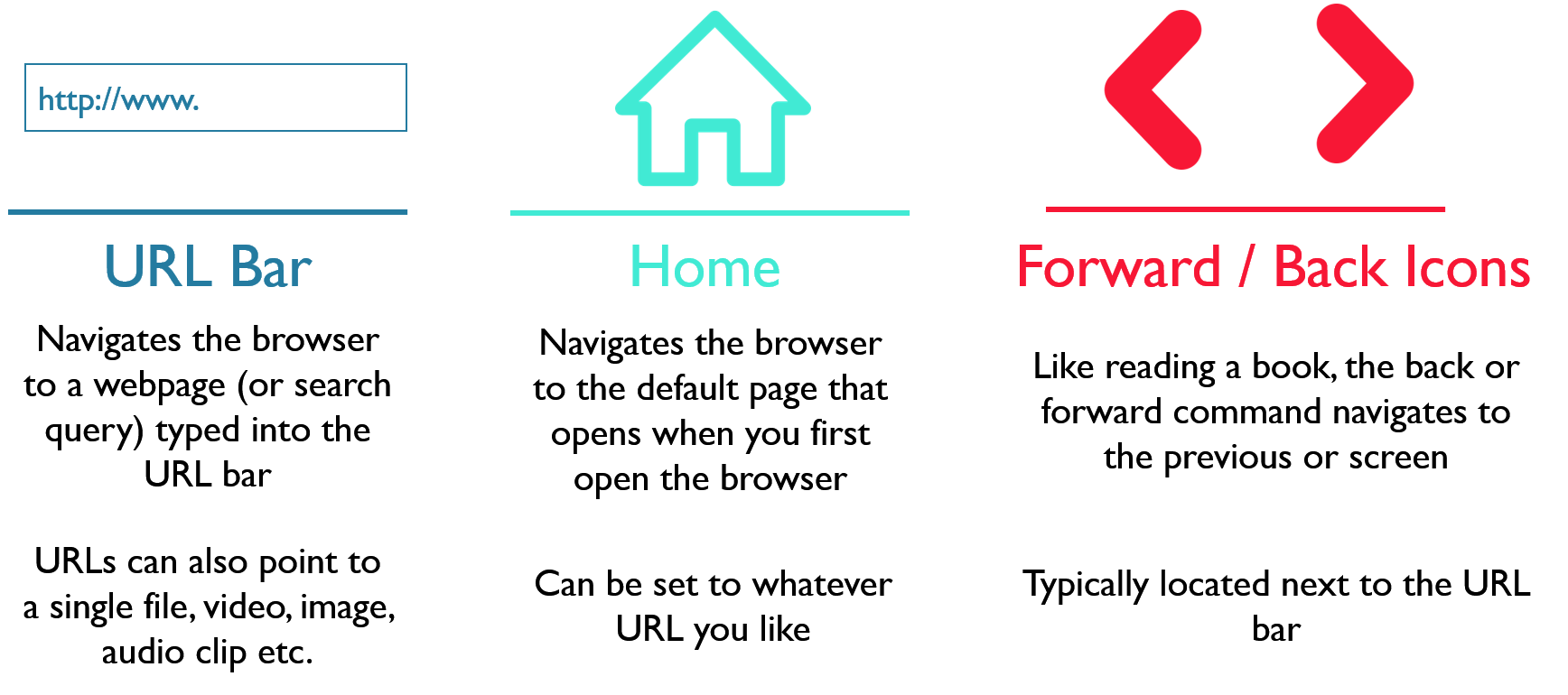

URL Bar - The box near the top of your browser where you type a website's ‘street address.’ That address, the URL (Uniform Resource Locator), has to follow a specific syntax, much like spelling follows rules such as ‘I before E, except after C.’ The syntax ensures the browser takes you to the place you expect.

Fig 1-6. - Don’t forget to bookmark your favorite sites in your browser!

Great! Now you know how to find and visit your favorite sites via their street address. Facebook, Google, and Yahoo are all websites that live at a specific, unique address. The core part of that address, such as facebook.com, is known as a domain. Domains typically end in ‘.com’, but there are many other endings, such as ‘.org’ or ‘.net’.
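To see the "specific syntax" of a web address in action, Python's standard library can split an address into the pieces a browser reads. This sketch uses `urllib.parse.urlparse` on a made-up address. example.com is a domain reserved for examples, and the path and query shown here are hypothetical.

```python
from urllib.parse import urlparse

# A hypothetical address, like one you might type into the URL bar.
url = "https://www.example.com/recipes/flapjacks?size=large"

parts = urlparse(url)
print(parts.scheme)   # https              (how to connect)
print(parts.netloc)   # www.example.com    (which site, including the domain)
print(parts.path)     # /recipes/flapjacks (which page on that site)
print(parts.query)    # size=large         (extra details for the page)

# The ending of the domain ('.com' here) is called the top-level domain.
print(parts.hostname.rsplit(".", 1)[-1])  # com
```

Each piece has a fixed position and separator (`://`, `/`, `?`). That's why a typo in the address can send the browser somewhere you didn't expect.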

Like a business, each website has a ‘street address.’ When you type it into the URL bar and hit Enter on the keyboard, the browser navigates like a car along the path to your destination. Much like with a GPS, the calculations for getting there happen without you noticing; it all happens ‘under the hood.’ Websites can also be communities, which allow people to come together around a common cause and solve problems. “I don’t have anyone to talk to about 17th century poetry” or “I need to show someone these cute faces of my grandchildren” - these are just a couple of examples of the many problems solved by online communities. These communities solve problems, which in turn attracts other users with the same problem. If the solution is effective and simple to understand, it will naturally gain attention and users.

Anyone who is interested can start their own website dedicated to whatever their passion is. Mark Zuckerberg did not need permission from some higher authority to start Facebook. He had a core problem - connecting with people - and so he went on to build his own solution to it. Many websites begin this way: a person has a challenge or issue and creates their own solution. Websites gain in popularity because other people across the web share that same ‘problem.’ Given the nature of his problem, ‘connecting with people,’ his solution also solved the problem for a great many people, billions in fact, allowing a global community to connect. Because he solved such a common problem, people sought out his website for their own purposes.

Fig 1-7. - There are now websites dedicated to helping people build websites using templates as starting points. EX - Squarespace.com, Weebly.com

My point is that you, too, can start a site. It doesn’t matter what it is about; the important thing is to bring what you’re passionate about and connect with others. If you’re entranced by the intricacies of knitting, then maybe you feel the need to talk with others about what a knee-slapping time you have with knitting. I’d like to posit my own idea for a domain: StraightOuttaYarn.com, which was still available to plant your flag in when I wrote this!

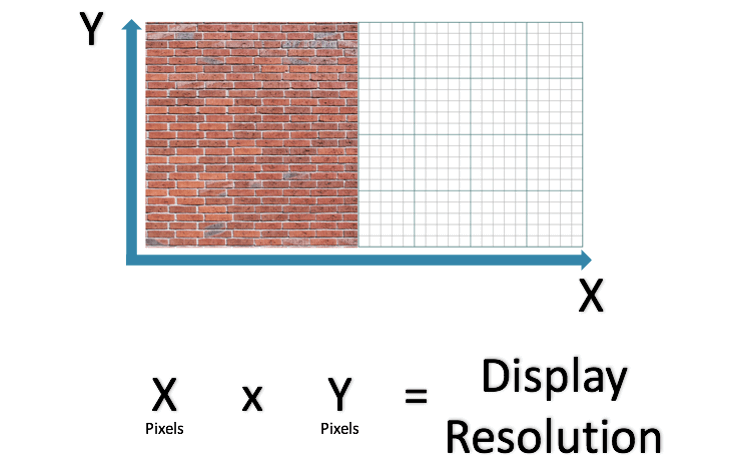

Pixel - Like bricks in a wall, pixels are stacked on top of and beside each other to build a display. Unlike bricks, each individual pixel can change its color. When the pixels change color in unison, they can produce an image, and if they work together and change quickly, they can produce digital video.

Unless you’re reading this in a printed book, you’re looking directly at a lot of pixels. You can actually hold a magnifying glass to the screen and see individual pixels! They are laid out like a typical graph, with X (horizontal) and Y (vertical) axes. You could hold a piece of graph paper up to the screen for comparison; the pixels are just much smaller than the squares. A screen's resolution is usually described by its width and height in pixels, and multiplying the two gives its total number of pixels.

1,024 (pixels) x 768 (pixels) = 786,432 total pixels

Fig 1-8. - The number of pixels used in a device becomes a selling point for gadget enthusiasts.

As a frame of reference, my first computer had a maximum resolution of 640x480. Now my smartphone has a pixel resolution of 1,440 x 2,560!
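You can check the pixel math by multiplying width by height. This short Python sketch uses the resolutions mentioned above. The function name `total_pixels` and the labels are just for illustration.

```python
def total_pixels(width: int, height: int) -> int:
    """Multiply a screen's width and height (in pixels) to get the total count."""
    return width * height

screens = {
    "1,024 x 768 display": (1024, 768),
    "first computer (640 x 480)": (640, 480),
    "smartphone (1,440 x 2,560)": (1440, 2560),
}

for label, (w, h) in screens.items():
    print(f"{label}: {total_pixels(w, h):,} pixels")
```

By this math, the smartphone's 3,686,400 pixels are exactly 12 times the 307,200 pixels of that first 640 x 480 screen.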

Before we really dig into the rest, I thought it best to give you an idea of my background so you can understand my frame of reference on technology. I hold no PhDs in computer science, and I did not code a multi-million-dollar operating system by age 16. So, why me? Maybe you’ll have a little more fun hanging out with me instead. Over the years, I’ve built (sometimes with friends) several websites with varying degrees of success. They range from an open source asset library for indie game developers to a Minecraft gaming community. I’ve always translated personal pursuits into career learning. In keeping with this, I’ve built my career in advertising around helping people understand and get excited about advanced web technology.

Biographically speaking, I was born in West Germany while my parents were both serving as military police in the United States Army. I’m the youngest of four sons, and I grew up in very rural central New York State. When your hometown is not a major metropolitan area, “Where are you from?” becomes an exercise in comparative local geography. In my case, we were about 15 minutes away from the Baseball Hall of Fame in Cooperstown, NY. I speak the truth when I tell you that my hometown of Van Hornesville is not even truly a ‘town.’ It is a geographically separate hamlet within the town of Stark, which has a bustling population of 767 people. Our rural hamlet subsists on agriculture and a get-it-done-yourself spirit born of its secluded environment. It’s the kind of town where cashing your weekly paycheck and buying lotto tickets can pass for having a dream. We were very rural and very poor, with 20%5 of the population living below the poverty line, a stat nearly equal to New York City’s.6 Still, I am reminded of my fondness for the area whenever I revisit and recall one simple country quirk: everyone waves to each other, whether they recognize you or not. The year I graduated from the public school (Pre-K - 12), there were a total of 13 students in my graduating class. Only two New York State public schools were smaller than ours, one of them a state school for the deaf.

On warm weekends my extended family would get together with friends, and my father and his brothers would play classic rock cover songs for hours while us kids ran wild. My father had taught himself to play guitar by ear. Beginning with simple songs like Roy Orbison’s Pretty Woman, he built up his skills over time until he was covering rock legends like Stevie Ray Vaughan and Eric Clapton. He leveraged his curiosity about guitars to learn independently at his own pace, and this type of learning has been the bedrock of my own expertise in technology. Unfortunately for my ego, my father’s talent for music proved an inheritable trait in appreciation only. I’ve attempted several instruments in my life, and the only one I ever proved ‘proficient’ in was the tambourine. You may have picked up that there isn’t much glory out there for tambourine players. Luckily, I had skills elsewhere.

I’m fortunate in a great many ways. Among those good fortunes was being born to a father with a keen interest in computers. That interest ensured that, throughout the years and despite our modest blue-collar means, our home always had a respectably modern computer. When you’re a child, every experience is new and fascinating. None of us enter this world with a baseline for any concept. We start our early years fragile and dim, and over time, with good health, we experience the range and meaning of life. From the moment the lights upstairs officially flick on for each of us to the end of that line, there are, of course, big events that tend to have a strong impact. Seismic shifts in our path through life take on a clear weight in retrospect. My own profound moment came from the unboxing of our new Packard Bell Navigator. At this point the personal computer was fast becoming the de facto learning tool in schools. Personal computing set off a chain reaction of innovations, which in turn triggered yet more explosions of groundbreaking technologies.

In my head, this was the undeniable wave of the future: a personal computer with a CD-ROM drive and a full-color screen interface. This was my own version of A Christmas Story’s Ralphie getting his ‘official Red Ryder, carbine action, two-hundred shot range model air rifle!’ The best part was that I hadn’t even realized I wanted it. This was the moment everything changed. The PC came bundled with Microsoft’s Encarta, which let my young brain satisfy its curiosity about the world outside my small, hilly corner of it.

Fig 1-9. - A multimedia powerhouse and home of rad gaming classic MegaRace.

Windows 3.1 was my first graphical user interface, or GUI, and it was a revelation. There were now icons that launched programs with a double-click of a mouse! No longer did you have to memorize a series of commands to access the floppy disk drive and launch a program. Not to mention the ability to multitask by switching between open programs with Alt + Tab on the keyboard. It was a revolution in computing that even a hillbilly kid could understand. I had my first experience creating multimedia at around the age of 9 with Macromedia’s Director program. Director, a precursor of sorts to Flash, taught me about keyframes and animating simple objects, which gave me an understanding of how interactive animations were created from that point forward.

Fig 1-10. - Bubbles and Spaceboots.

My first glimpse inside the case of a computer came when my brothers and I surreptitiously convinced my father that we needed a $143 upgrade: 4 MB more memory (RAM). We claimed it would help us “do better at school work.” Little did he know that it was actually so we could play Doom II. We needed the upgrade; otherwise the gameplay stuttered as if we were watching a slideshow. A side benefit was that the added memory let us more quickly multitask out of a game and into a calculator when my father walked into the room. I’d always loved video games, but this was the first time I saw a direct correlation between computer hardware and a better gaming experience. That same 4 MB of memory now costs a single penny.

I considered the factors that go into a ‘positive gaming experience,’ which in a technical sense often meant a higher frame rate, measured in frames per second (FPS); the more frames per second, the smoother motion appears. Frame rate is a hotly debated topic in the gaming community, but the general consensus is that higher is better, with diminishing returns as the FPS climbs past 60.
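The ‘diminishing returns’ are easier to see as frame time: how many milliseconds each frame stays on screen, which is 1000 divided by the FPS. A minimal sketch in Python; the frame rates compared are just illustrative picks:

```python
def frame_time_ms(fps):
    """Milliseconds each frame stays on screen at a given frame rate."""
    return 1000 / fps

# Illustrative frame rates, each double the one before it.
frame_rates = [30, 60, 120, 240]

for slower, faster in zip(frame_rates, frame_rates[1:]):
    saved = frame_time_ms(slower) - frame_time_ms(faster)
    print(f"{slower} -> {faster} FPS: each frame arrives {saved:.1f} ms sooner")
```

Going from 30 to 60 FPS shaves about 16.7 ms off every frame, while going from 120 to 240 shaves only about 4.2 ms, which is one way to see why each jump past 60 feels smaller than the last.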

In my junior and senior years of high school, I took the opportunity to attend a two-year vocational course on Information Technology. For context, this was the period after the dot-com bust, when public skepticism about the computing revolution was at an all-time high. Many people lost their shirts in the crash and had to start over. For those who paid attention, though, it was clear that there would not be a second bust like the first. The internet was clearly a tool, and our generation recognized it as such. This began a repeating pattern in my life: obsessively immersing myself in new learning opportunities. Information Technology is the study and practical application of the flow of data between computers. Simply put, it’s how computers talk to each other. The class was taught by one of the original IT guys, Al Sarnacki, a Vietnam vet and all-around great teacher. With a decidedly non-teacher persona, he conducted his class as a “Benevolent Despot,” which was a nice way of saying to us kids, ‘don’t piss me off.’ Not that anyone ever tested it, except for one kid, but that’s a different story. We began by learning various protocols, networking topologies, and troubleshooting principles. By the end of the second year, we were programming industrial-grade Cisco routers and improving grandmother morale everywhere by fixing computers.

It was truly a formative experience for me; up until then, all of my technical training had come from my brothers or from teaching myself. It turned out to be one of the most valuable classes of my entire education, as it laid the framework for my understanding of networks. From IP and MAC addresses to wiring standards for Ethernet, we were given an understanding of the nuts and bolts of how computers communicate. We had a window into how the internet as we knew it operated, and many of those same mechanics hold true to this day. The pursuit of this knowledge, however, had the unexpected side effect of making me the local whiz kid who could fix any computer issue. “Hey, I fixed your computer issue - it was porn related.” It’s always porn related.

It’s important to understand that fixing computers is rarely an inherently difficult task. There are prescribed steps for everything, and the internet has ensured that there is always a community for whatever you need to learn. Rather, fixing a computer is time-consuming. Between virus scans and drive reformats, you can spend a few hours just getting a single computer back to normal.

Further education came from my school’s progressive technology education program, where I learned how to code HTML. HTML, or HyperText Markup Language, is the code that tells browsers like Firefox or Internet Explorer how to draw images, text, and formatting on a web page. Imagine pulling the buttons, images, and text away from the screen and shining a flashlight at them from the side. You would essentially see stacked pyramids of various sizes and shapes, with each element sitting on top of the element that contains it. This is roughly how a browser models a page: each element, whether displayed or run, becomes an object in digital space (the Document Object Model), nested inside the objects around it. The browser reads an HTML file and follows the directions contained in its code. My first site prominently displayed yours truly, casually posing by lying across the bleachers, frosted tips, puka shell necklace and all. These were not proud times for fashion, but they were really great for learning!
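To make the ‘stacked pyramids’ picture concrete, here is a minimal sketch using Python’s built-in `html.parser` module to print how the elements of a tiny, made-up page nest inside one another. A real browser builds a far richer Document Object Model; this only shows the nesting, and the page itself is hypothetical:

```python
from html.parser import HTMLParser

# Elements that never get a closing tag, so they don't open a new nesting level.
VOID_ELEMENTS = {"img", "br", "hr", "input", "meta", "link"}


class NestingPrinter(HTMLParser):
    """Records each element's tag, indented by how deeply it is nested."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.lines = []

    def handle_starttag(self, tag, attrs):
        self.lines.append("  " * self.depth + tag)
        if tag not in VOID_ELEMENTS:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag not in VOID_ELEMENTS:
            self.depth -= 1


# A tiny, hypothetical page in the spirit of a first personal site.
page = """
<html>
  <body>
    <h1>Welcome to my site</h1>
    <p>Here is a photo of me: <img src="me.jpg" alt="me"></p>
  </body>
</html>
"""

printer = NestingPrinter()
printer.feed(page)
print("\n".join(printer.lines))
```

Each step of indentation in the printout is one layer of the pyramid: the image sits inside the paragraph, which sits inside the body, which sits inside the page.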

My reputation as the local whiz kid followed me to college, which resulted in people showing up at my dorm room asking for computer help. After a lot of thought about my long-term goals, I switched gears into marketing, a field combining technology, psychology, and business. I chose it because, while I had an aptitude for technology, I could not, for the life of me, understand people, and I wanted to learn more.

Being obsessively self-taught, I set about teaching myself Adobe’s pivotal graphic design software, Photoshop (CS1). I’d sit on my bed for hours on end, working through tutorial after tutorial. I’d taken no formal design classes, so I was obsessed with sponging up every bit of knowledge or skill I could get my hands on. The internet was again an amazing resource for learning, with hundreds of tutorials on every facet and function of Photoshop. The tutorials started with simple text effects and quickly led into compositing and web design. I was learning to express myself visually rather than verbally.

Fig 1-11. - Sample of projects I was designing circa 2007

After discovering my affinity for visual design and graduating college, I landed a job at Burst Media. Burst Media was a display advertising network that had built one of the first ‘ad servers,’ computers that place an advertisement on a web page. Websites make this happen by placing code on their pages that ‘calls’ the server for an ad to show. Despite its bad rap, which persists to this day, digital advertising was fascinating from a networking and software standpoint. I learned how multiple servers rapidly and cooperatively decided which ad to show a single set of eyeballs. These decisions took milliseconds and happened millions upon millions of times a day. Digital advertising in 2008 was child’s play compared to what the ad tech community is producing today.

It may or may not surprise some of you that, on a daily basis, companies both large and small are collecting data on you. Nearly everything you do in the digital realm generates data in some form or another. Even checking a notification from the lock screen of your smartphone can generate a data point. We’ll examine this phenomenon in much greater detail later in the book.

As I continued to learn as much as I could, I chose to specialize in rich media: advertising that uses advanced web features. To me, rich media was the closest avenue to the print advertising I loved growing up. It employed large-format pictures along with advanced web features like photo galleries and video. Because of these features, movie and video game advertisers naturally gravitated toward rich media, and getting to work on those campaigns was an exciting prospect. Rich media gave me a working insight into multiple hard skills, like engineering and design.

Working in advertising, especially in rich media, meant we had to be creative ourselves. Advertisers demanded that we innovate on technologies like mobile, as well as provide fresh ideas for execution. In every brainstorm for a particular product, I pushed myself to draw on my background to think of unique interactions that would provide either a level of fun or usefulness to the user. The point was to provide an additive experience for the user; I wanted to fight the notion that advertising was always negative for a web user’s experience. The ideas from brainstorms even included a beautifully designed multiplayer game displayed on an LED billboard in Times Square, which connected to smartphones and tablets via web technologies. Two users would compete, using cartoon cats and dogs along with other furry creatures, to unravel a digital toilet paper roll before their opponent did. It was a simple bit of branding for a paper product company, but its technical execution was very complex, and it really drove home how far web technologies had progressed in the few years since the start of my career.

As far as technology had progressed, it was still apparent that we were a long way from The Fifth Element or The Jetsons; the promise of a future filled with flying cars had not yet become our reality. Starting in 2017, this may finally be changing. Lighter materials and the budding drone industry have enabled no fewer than six companies to begin building prototypes of flying cars. The goal is to build flying cars that can pilot themselves and fit inside an average garage. Among these companies, AeroMobil is planning to launch its version in 2017, with an estimated cost pegged at $400,000-plus. Not exactly an impulse purchase, unless you’re a tech billionaire. Hell, Google co-founder Larry Page owns two different flying car companies. It’s just too bad average Joes would need to possess a Grays Sports Almanac covering 50 years of winners to afford one.

Fig 1-12. - Your reaction when you saw the $400K price tag.

The democratization of technology refers to the trend by which a singular technology or development becomes rapidly and widely available to anyone with an interest and the means.

Sounds great... but what does it really mean? In the past, trade guilds and academics typically had access to the latest tools and technologies of the day. This makes sense, because producing tools was prohibitively expensive given their mechanical construction, especially tools whose crafting required knowledge of mathematics or some form of handed-down skill. A Renaissance-era example is the mariner’s astrolabe, which uses the noonday sun to determine the user’s latitude while sailing the open seas. It’s easy to see how widespread use of a device like this could contribute greatly to trade, creating economic expansion and prosperity.

Fig 1-13. - The Renaissance consisted of a 200 year long game of ingesting MDMA and playing “feel my fabric, yo.”
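The astrolabe’s reading boils down to simple arithmetic. A navigator measured how high the sun stood above the horizon at local noon, then combined that with the sun’s declination for the date (its angle north or south of the equator), looked up in tables. A minimal sketch in Python, assuming a Northern Hemisphere observer for whom the noon sun stands due south; the angles below are illustrative:

```python
def latitude_from_noon_sun(noon_altitude_deg, solar_declination_deg):
    """Estimate latitude in degrees from the sun's height at local noon.

    Assumes the noon sun is due south of the observer (true across most of
    the Northern Hemisphere). Declination is the sun's angle north (+) or
    south (-) of the celestial equator on that day.
    """
    zenith_distance = 90.0 - noon_altitude_deg  # angle between the sun and straight up
    return zenith_distance + solar_declination_deg


# At an equinox the declination is about 0, so a noon sun 50 degrees up means about 40 degrees N.
print(latitude_from_noon_sun(50.0, 0.0))

# Near the June solstice the declination is about +23.4 degrees, so the same spot sees a higher sun.
print(latitude_from_noon_sun(73.4, 23.4))
```

Both readings land on roughly the same latitude, which is the whole point: the sun’s height changes with the seasons, but the tables let a sailor correct for it.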

In 1440, Johannes Gutenberg invented the printing press and changed the world as we knew it. Most of you will remember this from school. What you might not know is that the legacy of the printing press is still in play to this very day and will continue to be well into the future. The printing press, of course, allowed the transference of ideas via mass media for the first time. No longer would monks or priests spend hour upon hour writing calligraphy to create astoundingly beautiful but labor-intensive texts. As more and more people gained access to these tools, their ability to contribute to society economically and artistically rose dramatically. Prior to this, ideas were transferred orally or, if you had the money, by paying people to handwrite each book. Ideas were static and, worst of all, geographically locked.

Over time, methods of reproduction expanded into more illustrative forms, such as lithography. Lithography is a printing process in which an image drawn with a greasy medium on a flat stone or metal plate is transferred to paper, relying on the fact that oil and water repel each other. Because illiteracy was rampant at the time, imagery could be more impactful than printed books, and lithography brought perspective to a much wider audience. Philosopher Walter Benjamin noted that “lithography enabled graphic art to illustrate everyday life.”7 This self-expression became culturally impactful because people across the world could communicate simply about the conditions of their lives, emotions, and desires. Recognizing similarities across geographically separate places became a stepping stone toward the community-based internet. The resulting economic growth brought cultural exchange with it, leading in turn to more technological advances.

Today, digital media authoring tools are increasingly available to anyone with an internet connection. As soon as an idea takes shape, software can carry it out into the wider digital world, where it is instantly available for anyone in the world to view at the moment of publication. We know from the recent political revolution in Egypt that the immediacy of technologies like Twitter can organize groups of people around a single purpose. The power of this cannot be overstated. With the advent of each new engine of expression, more and more individuals are given a voice, and these technologies will clearly play a large role in the further development of societies. As billions of people in developing countries come online and find drivers of creativity and function at their fingertips, what will they have to say?

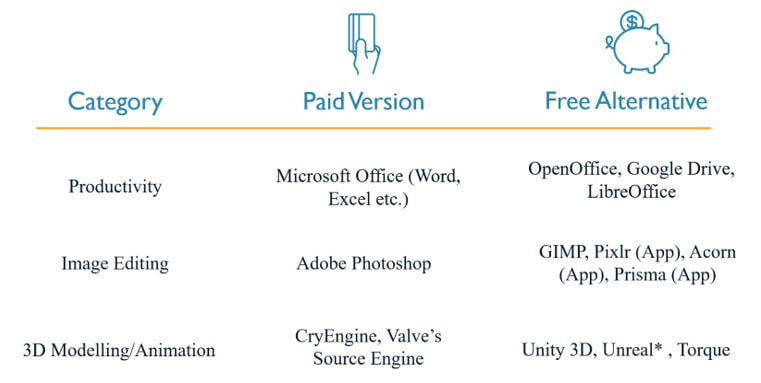

Services like word processors, image editors, and 3D animation software are all now available for free, 24 hours a day. More and more, people are educating themselves with these tools. They then produce passion projects that may or may not find an audience. The point is, the user has been empowered via the democratization of technology.

Fig 1-14. - Johannes Gutenberg. Blacksmith, Goldsmith, inventor of both the Printing Press and Pig-tailed beard.

Digital files like documents or videos consist of bits and bytes, so they can be reproduced endlessly and exactly. Going from one file to two is just a copy/paste away!

Fig 1-15. - You can copy any file: video, images, spreadsheets. If the file is digital, it can be copied.
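Here is a minimal sketch of ‘just a copy/paste away’ using Python’s standard library: copy a file, then confirm the copy is bit-for-bit identical by comparing SHA-256 fingerprints of both. The file name and its contents are made up for the demo:

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path):
    """Return a fingerprint of the file's bytes; identical bytes give identical fingerprints."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


with tempfile.TemporaryDirectory() as folder:
    original = Path(folder) / "spreadsheet.csv"  # hypothetical example file
    original.write_bytes(b"month,sales\nJan,100\nFeb,120\n")

    copy = Path(folder) / "spreadsheet_copy.csv"
    shutil.copyfile(original, copy)  # the digital "copy/paste"

    same = sha256_of(original) == sha256_of(copy)
    print("Copy is identical:", same)
```

A photocopy of a photocopy slowly degrades, but a digital copy of a digital copy carries exactly the same bytes, which is why the fingerprints match.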

Protip: In any browser window, word processor document, or PDF (most, anyway), you can search for a single word or phrase via a keyboard shortcut. Shortcuts, keys pressed together, unlock additional functionality.

Windows: Ctrl + F

Mac: Cmd + F

Then type your desired word or phrase. The find bar matches the text exactly as you type it, so there’s no need for the quotation marks you might use in a search engine. This can help you easily navigate large documents like contracts.

Server - A type of computer that stores resources and provides them to other computers. Resources can include ‘files’ (a picture of a cute kitten, sometimes flapjacks) or a ‘service’ (Netflix, Hulu) that streams those resources to other computers or devices.

Fig 1-16. - A video file can be stored geographically closer to your area to speed up access. It sure beats transferring the whole series of Breaking Bad (many, many gigabytes) from New York to San Francisco!
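To show the request-and-response idea behind a server, here is a minimal sketch using only Python’s standard library. It starts a tiny web server on your own computer, stores one pretend ‘file’ in memory, and then asks that server for it, the way a browser would. The path `/kitten.txt` and its contents are made up for the example:

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen

# Hypothetical "resource" the server stores; in real life, a kitten picture or a video file.
RESOURCES = {"/kitten.txt": b"a picture of a cute kitten (pretend)"}


class ResourceHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = RESOURCES.get(self.path)
        if body is None:
            self.send_response(404)  # the server doesn't have what was asked for
            self.end_headers()
            return
        self.send_response(200)  # 200 means "OK, here it is"
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def fetch_from_local_server(path):
    server = HTTPServer(("127.0.0.1", 0), ResourceHandler)  # port 0: let the OS pick a free port
    port = server.server_address[1]
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        with urlopen(f"http://127.0.0.1:{port}{path}") as response:
            return response.status, response.read()
    finally:
        server.shutdown()
        server.server_close()


status, body = fetch_from_local_server("/kitten.txt")
print(status, body.decode())
```

A streaming service works on the same request-and-response principle, just with enormous files, many servers, and copies stored near the people requesting them.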

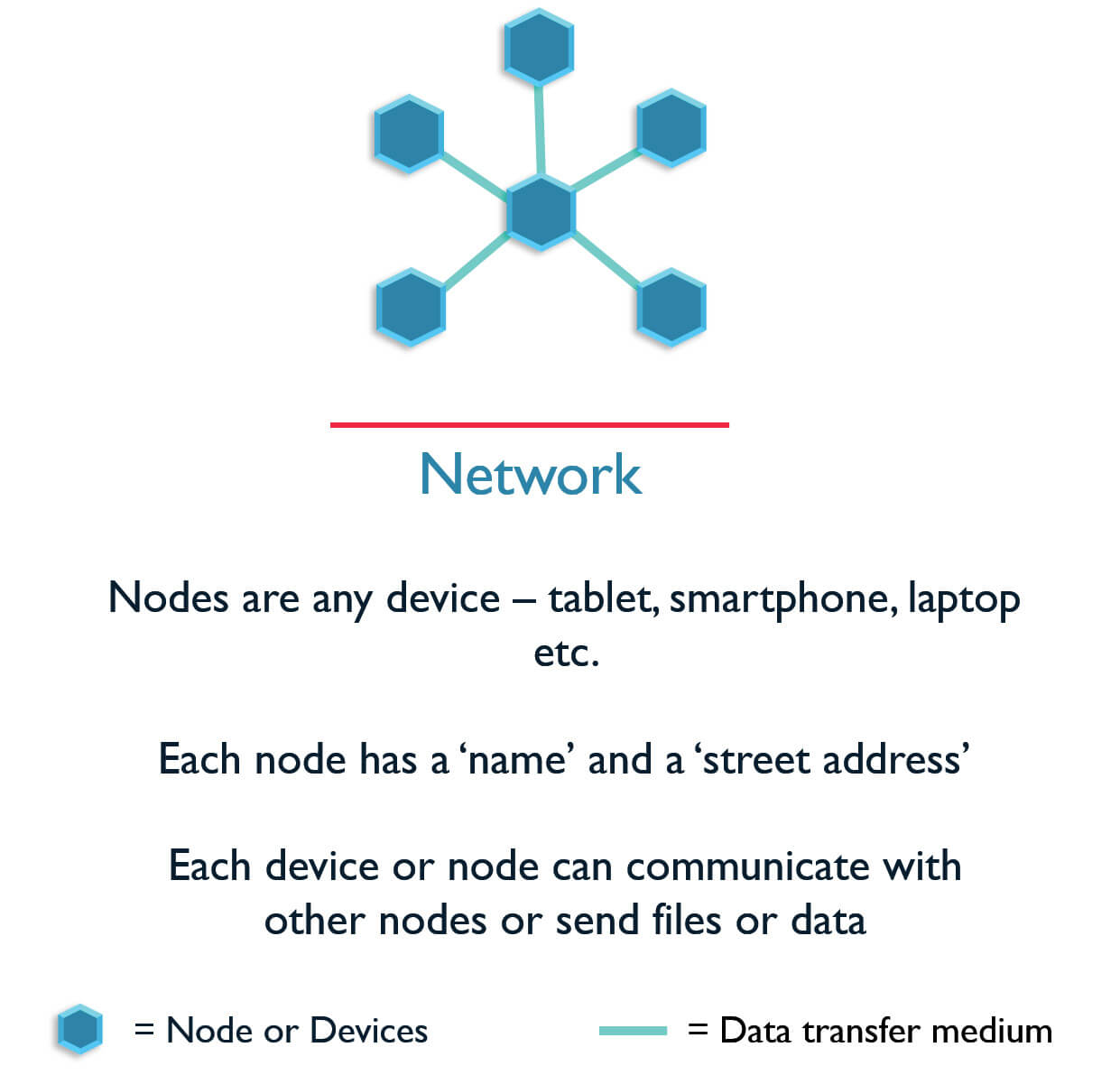

Network - A group of devices or computers that are able to communicate with each other. In networking, ‘nodes’ are devices, which can be anything from a smartphone to a laptop or even a single temperature sensor monitoring a plant. As in society, each node is given a name and a street address. A node’s street address takes the form of an IP address (IP stands for Internet Protocol), and just like a real address, it can change when the device moves. A device’s MAC address, and for smartphones and tablets its IMEI number, identify the device itself as it interfaces with a network; these are meant to stay fixed, like your name.

Fig 1-17. - Networks can consist of as little as two devices.
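Python’s built-in `ipaddress` module can make the ‘street address’ analogy concrete. A minimal sketch, with made-up addresses: it checks whether each device’s IP address falls inside a typical home network (the ‘neighborhood’), then reads a MAC address as the six bytes of hardware identity it represents. The outside address comes from a range reserved for documentation examples:

```python
import ipaddress

# A typical home network: every local device's address starts with 192.168.1.
home_network = ipaddress.ip_network("192.168.1.0/24")

# Hypothetical devices and the IP addresses they were handed today.
devices = {
    "laptop": ipaddress.ip_address("192.168.1.23"),
    "phone": ipaddress.ip_address("192.168.1.57"),
    "server far away": ipaddress.ip_address("203.0.113.10"),
}

for name, address in devices.items():
    where = "on the home network" if address in home_network else "somewhere else"
    print(f"{name}: {address} is {where}")

# A MAC address is six bytes written in hex; unlike an IP address,
# it is normally assigned to the network hardware itself.
mac = "3c:22:fb:12:ab:9e"  # made-up example
mac_bytes = bytes(int(part, 16) for part in mac.split(":"))
print(len(mac_bytes), "bytes:", mac_bytes.hex(":"))
```

If the laptop moves to a coffee shop, it gets a new IP address on that network, but its MAC address travels with it, much like moving house without changing your name.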

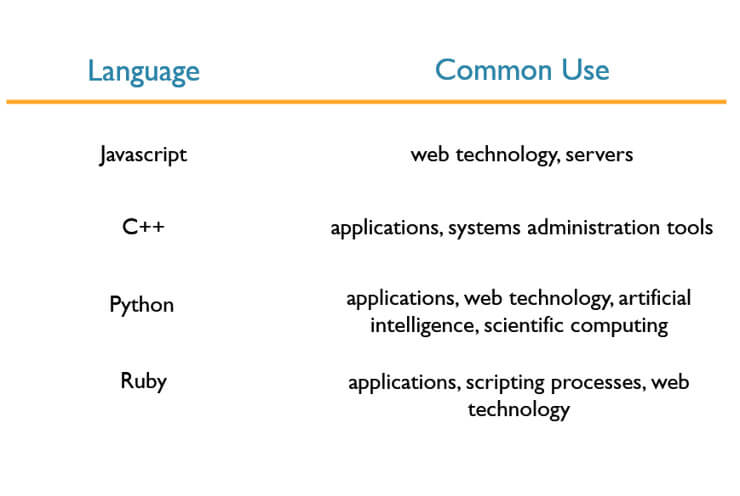

Like the world of humans, the world of computers communicates using a variety of languages. And like spoken languages, coding languages have variations similar to dialects and accents. Some are intended for generating graphics; others are meant to quickly crunch numbers. Code is a noun, yet also a verb, as in “I’ve got to code a JavaScript-based front end” or “The code is ready for review.”

Additionally, these coding languages need an interpreter (or compiler) of some sort to read the commands written in code and then carry them out, for example by drawing on the screen. On the web, this interpreter is a browser like Google Chrome or Firefox. Each browser has strengths and weaknesses in its performance, and which one you use depends entirely on your needs as a user. Some languages are organized around object-oriented programming, while others revolve around actions or animations.

Again, as with human languages, coding languages have differing uses and can all be used together, depending on the end goal of the project. For example, you would not use an animation tool like Adobe’s Flash to maintain a large set of database records.

Further examples:

Fig 1-18. - There are many types of languages and each has a community of developers sharing skills and code.
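To illustrate what an interpreter does when it reads commands and carries them out, here is a toy interpreter in Python for a tiny, made-up language with three commands (`SAY`, `ADD`, `SHOW`). The language is hypothetical and vastly simpler than JavaScript, but the basic job is the same: read each instruction, work out what it means, and act on it:

```python
def run(program):
    """Read a made-up three-command language line by line and carry out each command."""
    total = 0
    output = []
    for line_number, line in enumerate(program.strip().splitlines(), start=1):
        command, _, argument = line.strip().partition(" ")
        if command == "SAY":  # SAY <text>: print some text
            output.append(argument)
        elif command == "ADD":  # ADD <number>: add to a running total
            total += int(argument)
        elif command == "SHOW":  # SHOW: print the running total
            output.append(str(total))
        else:
            raise ValueError(f"line {line_number}: unknown command {command!r}")
    return output


program = """
SAY Hello from a tiny interpreter
ADD 2
ADD 40
SHOW
"""

for text in run(program):
    print(text)
```

Hand it a command it doesn’t recognize and it stops with an error, much like a browser’s console complaining about a typo in a script.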

In this edition of “Ok, maybe we’re living in the future,” we’ll review the state of technology in fashion. Back to the Future 2 certainly laid out an odd template for what people would be wearing in the future. Not all is lost, though: as we’ll find out, some unique fashion trends around the corner will help connect the dots between literal helmet hair (see the butthead below) and clothing as a tech product.

Fig 1-19. - Anger is a fishnet shirt with no tech features.

For every technology, there can be improvements to the fundamental science and application of its purpose, and these can be expressed through the discipline of design. However, sometimes when design merges fashion and technology, the results can be disastrous. Though, as we’ll see, if fashion considers user experience when merging with technology, the results can be something pretty special. The digital and physical realms collide in the form of wearable devices, which fuel the development of a ‘quantified self.’ The quantified self is the idea that our physical actions can be measured and aggregated to create insights into our daily lives. It has arrived in the form of personal health tracking: by wearing products like the Fitbit, users can track steps taken, calories burned, heart rate, and more. The intent is to inform ourselves about our true behaviors, as opposed to self-reported behavior, so we can modulate our actions to meet our goals. Design plays a pivotal role in the advancement of wearable technology, because if user preferences are not taken into account, the results can be awkward to the point of ensuring failure. Without a streamlined introduction into the marketplace, emerging tech and fashion products can stumble, hard. A prime example of the challenge of marketing wearables is the release and public failure of Google Glass.

Fig 1-20. - An example of trying to make ‘fetch’ happen.

Google Glass is a device that projects graphical user interface (GUI) elements, like buttons and images, as an overlay on top of your vision and is controlled by the user’s voice. Interface elements can include items like email notifications, video chat, and turn-by-turn directions. As a geek, I found this product a no-brainer. In practice, users stuck out like a sore thumb and on more than one occasion were called “Glassholes.” It’s funny that people can hold smartphones in their hands all day, but put that technology on your face and people freak out. Urban Dictionary defines “Glasshole” as ‘a person who constantly talks to their Google Glass, ignoring the outside world.’ Given that voice controls can be misinterpreted in public settings, it’s easy to see how people could become impatient with Glass users. People were also annoyed at the possibility of being recorded in public or at private events. Society rejected Glass so viscerally that the product was discontinued for the public and redirected toward industrial applications. A step back for the futuristic metallic clothing of our dreams, for sure.

The future of fashion involves adding sensors and intelligence to our everyday clothing. For example, Microsoft has patented a “mood shirt” that senses your mood via body temperature, heart rate, and other sensors, and reacts appropriately. Say you walk into a room full of strangers. If you’ve ever felt anxious in that situation, this shirt can stimulate your body by applying pressure that simulates a hug, allowing your brain to relax in a seemingly tense situation. Further examples of tech-enabled clothing include:

Project Jacquard

Smart Athletic Apparel

No Cow Leather

The ongoing study and development of computer science has made it possible to design and build complex software. Over time, software gets upgraded and new bells and whistles are added; what used to be an advanced feature becomes commonplace. As a result, many pieces of software can act as working alternatives to costly commercial software. Some companies offer free accounts and charge only for extended features. Most businesses can be run and maintained on freely available software.

Fig 1-21. - In the digital age, there’s a tool for every need. *Unreal charges only a royalty based on sales of the software built with it.

This book, by the very nature of its content, will challenge many of our presently held beliefs about our day-to-day world. Consequences and benefits for the whole of society, across the world, are at play here. As we already know, the actions of a single nation may have sweeping consequences for another. We owe it to ourselves and our children to engage in these difficult conversations. We need to ensure that the world they inherit is one we can all be proud of, one that represents our time’s hopes rather than its fears. Though I am optimistic, my own feelings about tech are subject to change. Not even I know how I will feel about the technologies of tomorrow as we explore their use and potential misuse.

The difference in outcomes between acting on our hopes and acting on our fears could be compared to the worlds of Star Trek versus Star Wars. Sure, wielding a lightsaber would be totally sweet, until the dominating evil empire destroys your entire home planet like it was nothing.

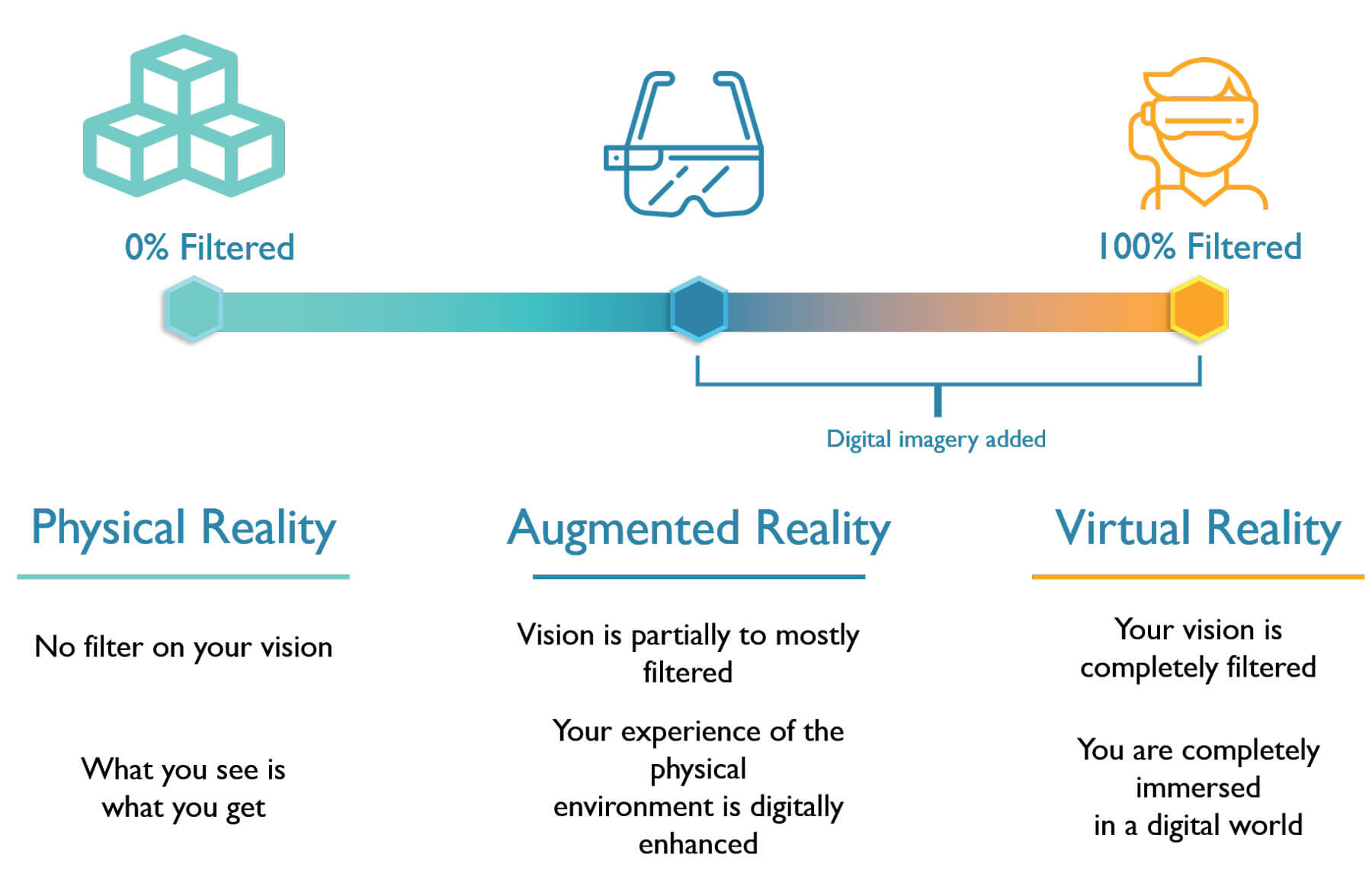

Speaking of dominating societies, Niantic, in partnership with Nintendo and The Pokémon Company, recently released Pokemon Go, a smartphone game based on the hit Pokemon franchise, and in doing so sowed the seeds for augmented reality to become a mainstream reality. Simply put, augmented reality takes the live view from a smartphone’s camera and overlays digital imagery on it, letting you, the user, see images through your smartphone that are not physically there. In Pokemon Go, users explore the real world in search of all-digital Pokemon to capture and train. Pokemon can reside in parks, forests, parking lots, local landmarks, and so on: any tangible location in real life can serve as an alternate location within the game. The game is presently a massive hit, with an estimated 9.55 million daily active users,8 and it sent Nintendo’s stock price soaring 70% in the first week after release. The random appearance of a rare Pokemon has drawn crowds of several hundred people to storm a single location.9

In this case, the augmented reality filter is supplied by the Pokemon. When you attempt to capture a Pokemon, the app activates the camera and opens a viewfinder as if you were taking a picture. The Pokemon hops around in your phone’s view of the physical environment until you capture it. Given that Pokemon are generally small, from around the size of a rabbit and up, they may filter only about 10% of your vision, whereas a virtual reality headset would filter 100% of it.

Fig 1-22. - Gamers have been anxiously waiting for VR as long as I can remember.

As a lifelong gamer, I understand the gaming perspective as well as the more naturalistic ‘get outside and play’ sentiment. Throughout my childhood, society and parents pressured kids with great fervor to ‘get outside!’ Now that Pokemon Go has been released, those same people are belligerently shouting at these kids to ‘get inside!’ Which is it, society? Quit being such a grump! Perhaps it’s not all bad for gaming: Pokemon Go has inspired a headmaster in Belgium to create an app based on the hit game. In Aveline Gregoire’s version, users learn about books that other users have hidden around town through a Facebook group called Book Hunters, which has already attracted over 40,000 users in just a few weeks.10

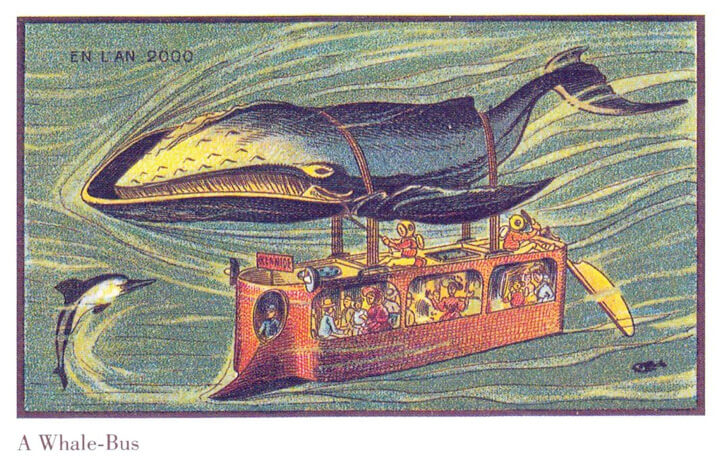

While Pokemon Go is a personal experience, some companies are bringing augmented reality to large groups for shared experiences. One demo that has captured venture capital funding and the attention of technology enthusiasts everywhere is Magic Leap’s whale demo. Magic Leap, which describes itself as a developer of novel human computing interfaces and software, aims to be the next evolution in computing interfaces. In the demo, a gymnasium is filled with students when suddenly a full-scale humpback whale bursts from the wooden floor and splashes down across the gym. The secretive startup has garnered over $840 million in funding from the likes of Google and Alibaba to bring augmented reality interfaces and experiences to the masses.11 This level of funding enables companies to rapidly enter a market with massive fanfare. If you haven’t heard of Magic Leap before, by the end of 2017 you will be aware of it, and likely a big fan.

Fig 1-23. - High school is about to get more interesting

In the example above, it’s important to note that these projections will require a headset that may or may not reach Google Glass levels of silly. Imagining a gymnasium full of kids all wearing headsets to get an education sounds like some dystopian future. I make this pledge to you, dear reader: we will investigate this process together.

If ever you should need a reminder of our shared experience, know that we are in this journey together. All of us. It is my assertion that the tactical application of technology has the ability to change the status quo for the betterment of all people. Without taking the time to reflect on where progress leads, we run the risk of inadvertently creating technologies that ultimately cause more harm than good. My way of stepping up to that challenge is to help as many people as possible gain an objective, general understanding of the pros and cons of technology. Undoubtedly, the more people understand the workings and machinations of technology, the better we will be able to make pragmatic and inclusive decisions about our shared future. People need to be responsible for their property, in the physical sense as well as the digital. I’m aiming to contribute to a conversation larger than myself and positively influence our shared future. Public discourse on technology policy shouldn’t be a food fight that ends when every last cupcake has been thrown, with the winner judged by what has stuck to the walls. We need to work together to fill in the widening gaps in society, and technology represents one of those yawning gaps. Only then will we be able to steer our future toward positive outcomes. There is no opting out of tomorrow, short of removing yourself from society. This book is my double-down bet that modestly adding my voice to the many others will help bring about a future that fulfills our best wishes for our children.

Technology can appear a lot like a dark forest, but we can steer how bright it is. No one is going to build the future for us; it’s up to us as individuals to step up and contribute. The hoverboard didn’t become a reality by the will of a single person. It took all of those talented engineers and designers to make it happen. Given the incredible pace at which technology has advanced, it is apparent that the rate of change will continue to accelerate. Never in our history have we stood on a precipice like the one we stand on now. Doc Brown would never have investigated time travel if he had listened to the critics. What this means for you, dear reader, is this: strap in, because where we’re going, we don’t need roads...

This entire experience is nothing if not a learning experience. Let’s just run with it: I’ll be your wide-eyed Doc Brown if you’ll be my mostly-willing Marty. What do ya say? In all my years of enthusiastically examining technology, here’s the prevailing advice: as with any new city you visit, it’s best to have a guide. You have a friend and guide here in me, assisting you along in your education. Growth most often happens when we exit our comfort zones to experience something new. Freedom is often, in my opinion, misattributed to the realm of physical freedom or freedom from bondage. I’d contend that a more useful portrayal is the recognition of, and appropriate reaction to, obstacles as they lie in our path. This type of cerebral freedom is the sum of what our physical senses report back to the brain, informing an ongoing understanding of the environment. Not to be too cheeky, but right now it begins with perceiving the words on this page, and so we move on to perception!

Right, so what is perception, exactly? We humans have five senses, each operating within its own area of expertise and all reporting back to the brain. Relaying sensory information to the brain demands a great deal of energy, all the more so with five senses at work. For efficiency, the brain provides us with shortcuts for processing these environmental stimuli, or inputs. The signals are converted into electrochemical signals, not entirely unlike the data used in devices. Drawing on all of our senses together, the brain interprets the environment much like piecing together a jigsaw puzzle. As messages from the senses flow in, the brain solves the puzzle as best it can, even when pieces are missing.

Our senses can also escalate these messages, à la flooding our system with adrenaline at the first sign of predatory danger. This allows us to react as quickly as possible once our brains register the sight of a predator. It’s similar to keeping a shortcut on your desktop or home screen, there for quick and easy access. See a predator and the brain instantly clicks ‘GTFO.exe’; for younger readers, that would be tapping the YOLO emoji on your smartphone. Our bodies kick our asses into gear and send us running as fast as our monkey legs will carry us. Our continued survival was made possible by biological biases known as ‘gut instincts’ and reflexes. As contemporary humans, we still rely on many of these instinctive reactions, which arose early in our evolutionary history, to inform our present worldview. Problems can arise when we apply instinct to situations where our understanding is out of its depth.

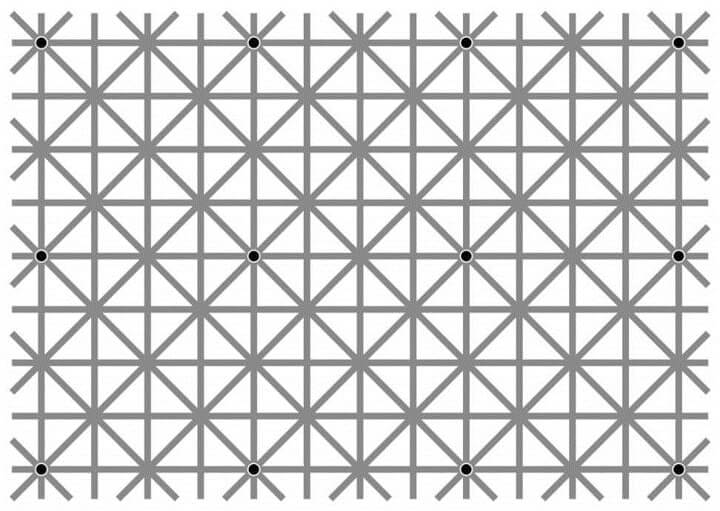

Functioning as verbal guideposts, proverbs became a way to infuse daily living with evolutionary wisdom: ‘The grass is always greener on the other side of the fence,’ ‘Look before you leap,’ or even ‘Good things come to those who wait.’ These shared sayings spread between people because they were both A) catchy and B) offered insight into commonly arising issues. We find them helpful because our brains can only make decisions with the inputs available, which often do not encompass the whole truth. A proverb, as an input, helps us maintain the status quo or improve on it. This is the essence of input/output. We are, after all, human, and these deficiencies in our processing arise for natural reasons. It is simply impossible for humans to know the full spectrum of information about every topic. Thus our processing of inputs can diverge from reality or get lost in translation. A recent example of this variation in processing is the black-and-blue versus white-and-gold dress debacle, better remembered as the day the internet’s collective mind was blown. Perception demonstrably differs from person to person.

Fig 2-1. - Black and Blue or Gold and White? Our eyes offer differing reports.

Amid the constant motion of our day-to-day environment, our busy brains rarely have the luxury of time to analyze every situation in minute detail. Certain moments of our days stand out for one reason or another and receive additional inspection. Otherwise, we take care of what we have to take care of on a daily basis. When making a decision, our brains interpret the available environmental inputs, reference any memories or emotions concerning the topic, and leap to a conclusion. We may feel we arrived at that conclusion through impeccable recollection, but this is not always the case.

Here’s an at-home experiment, for science! Pour assorted jelly beans into a dish and pick one without looking. Plug your nose and pop the jelly bean in your mouth. Keep your nose plugged and chew; you likely won’t be able to determine the flavor. This is another example of how our senses are filtered through an interpretation of the environment. Similar to the way augmented reality and virtual reality filter vision, our other senses can be filtered too. The visual tricks of the Magic Eye images from the 90s illustrate the same phenomenon: 3D shapes materialize when the viewer relaxes their eyes while staring at the swirls of color.2

In the previous chapter, we covered:

Defined bits and pixels

Defined servers and networks

Reviewed the differences between hardware and software

Examined the variations of websites and online communities

Developed the computing concepts of copy and paste

Described some, but not all, coding languages

In this chapter, we’ll answer the following questions:

What is cognitive science?

What are cognitive biases?

What is cognitive dissonance?

How does neuroplasticity inform my ongoing development?

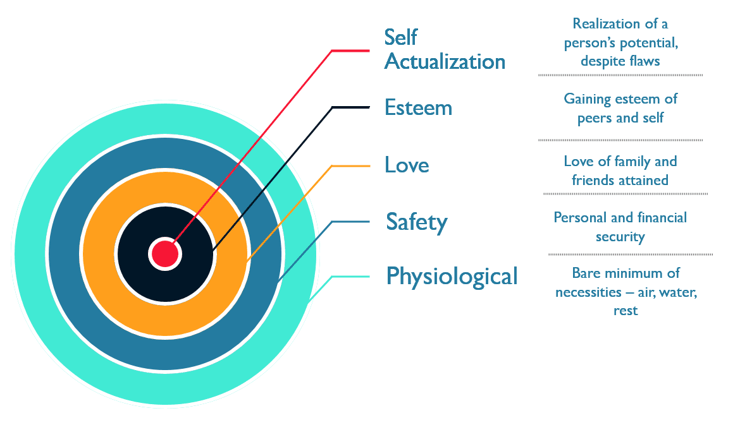

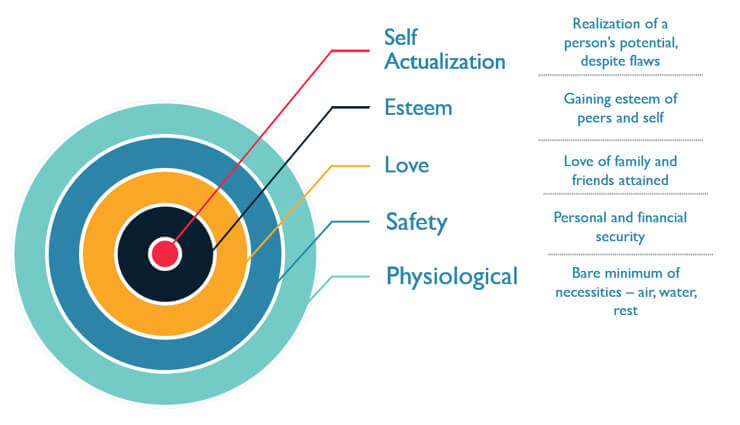

What is Maslow’s theory of motivation?

How can I develop a pragmatic framework for understanding and applying technology?

So we recognize that our senses evolved to require a partner in the brain, one that processes environmental stimuli and derives meaning from them. Evolutionary psychologists John Tooby and Leda Cosmides posit that our brains are, to this day, wired for a world of brutal and short lives. They write, “... our modern skulls house a stone age mind. The key to understanding how the modern mind works is to realize that its circuits were not designed to solve the day-to-day problems of a modern American -- they were designed to solve the day-to-day problems of our hunter-gatherer ancestors.”3

It’s as plain as a white bread and mayonnaise sandwich: projected through time, our brains’ functions were not dialed in to the decisions required to live in our present age, let alone to how crazy things will get in the near future. If we were to drop off one of our ancestors, say a Homo habilis, at a froyo stand, their mind would be blown. If we plunked them into a corporate environment, they’d likely tear the place apart. Tooby and Cosmides attribute this mismatch to the fact that natural selection of genetic traits plays out over many generations. For example, our brains were programmed to crave foods that yielded nutritional sustenance. Fruit smells sweet; rotten fruit smells bad. When we smell the sweet fruit, our body releases dopamine, a chemical messenger associated with pleasure and reward.

It may seem simple to us now, but it’s easy to imagine a Homo habilis growing thorny about never owning a refrigerator. That’s part of the problem: our brains still have not caught up with the comforts we’ve developed for ourselves. We are hardwired for a different mode of living, and our brains may prefer to rely on simple wisdoms, such as proverbs, as guideposts. Our early ancestors occupied a world full of danger from predators as well as from their own people. This caused their brains to interpret the world with a survivalist’s perception, filtering out unnecessary details in the name of remaining not dead. Meanwhile, many of our present selves are kicking back, enjoying the wonders of air conditioning, and heavily debating “What’s for dinner?” For these same reasons, I believe our brains are not especially well suited to navigating today’s technology environment.

In reframing our understanding of bias, we must first highlight how we apply information to decisions. We can demonstrate pre-existing biases with a quick exercise: imagine a fully grown stick figure drawn on a sheet of paper. I’ll call him Stanley Stickerson for clarity. Now, please give Stanley Stickerson a shield to fend off rival tribe-guys brandishing fire-hardened sticks. Those other tribe-guys are total jackasses, but our Stanley is protected as long as he’s equipped with a shield. Feel free to imagine throwing it to him while dishing out a witty one-liner. If you’re unable to think of a one-liner, jazz hands would likewise be acceptable. Back to Stanley: depending on your own biases, you imagined the shield in the stick figure’s left or right hand. Which was it? Chances are, Stanley is holding the shield in the same hand you favor yourself. Ergo, a simple example of a bias affecting decision making.

Our biases represent natural processes, and there’s nothing inherently wrong with acting in accordance with them. In fact, our bodies respond to environmental stimuli via chemical messengers such as serotonin and dopamine, which play roles in mood and reward within our nervous systems. We can use our knowledge of their existence as an opportunity to view things from a slightly different angle, a cognitive adjustment. Cognition can be defined as the processes by which our brain engages in perception, reasoning, judgement, and memory. When we lack information about a topic, calculating a decision is like working from a half-painted picture, or a meme without a caption. Understanding our behavior requires a broader approach. Cognitive science fits the bill thanks to its interdisciplinary approach, drawing on linguistics, neuroscience, artificial intelligence, philosophy, anthropology, and psychology. Cognitive science is basically the Captain Planet of sciences. With their powers combined, these disciplines contribute findings and research to one another in a synergistic exchange of insight. Mix them together in a pot, and we have the discipline of cognitive science!

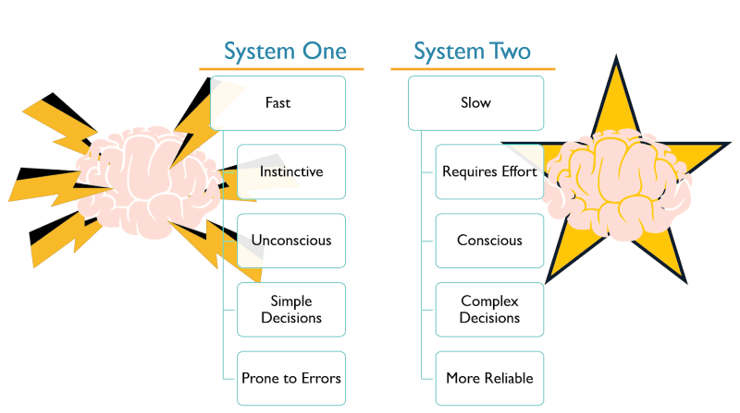

Given that biases exist in our most basic interpretation of the world, they shape our engagement not only with technology but with our environments at large. Decision making, of course, begins with defining the problem. Daniel Kahneman, Nobel laureate and bestselling author of Thinking, Fast and Slow, describes two distinct systems within decision making. The first, system one, is intuitive thinking, providing us with a rapid estimation of what’s coming next; the second, system two, is more carefully considered and deliberate, allowing your brain to make long-term decisions.4

Fig 2-2. - Each system of decision making has benefits and drawbacks.

When making any decision, we apply one or the other of these two systems. Given the number of decisions any person makes in a day, that adds up to a ton of opportunities to jump to conclusions prematurely, with varying effects on the outcome. Kahneman posits that “when information is scarce, which is a common occurrence, system one operates as a mechanism for jumping to conclusions.” This is particularly common when it comes to people’s grasp of technology. Information floats around, but in many cases there appear to be no clear avenues to further understanding. When making decisions, each system has its own benefits and drawbacks. Yet in both systems, a wide variety of cognitive biases can sway our thinking one way or the other.

Cognitive biases can be thought of as the electrical wiring that supplies the lights in your home: the wiring exists in every room at all times, though the lights aren’t always switched on. When deciding what to think about a particular topic, the brain recognizes a pattern that corresponds to a specific ‘light’ and flips it on. The brain, seeking to save energy, prefers to take mental shortcuts. These cognitive biases enable people to rapidly settle on their own personal frame of reference for a given problem or topic. This could be anything from what to eat for dinner to what protocols need to be in place to ensure personal privacy on the web. It’s similar to cooking from a recipe: if you lack an ingredient, you can still cook your meal. It just might not turn out the same. Have you ever tried to eat flapjacks with no maple syrup? Might as well eat cereal with water. It’s savagery!

Researchers at the Max Planck Institute have found that certain areas of the brain make a decision before our conscious mind has had a chance to decide. Sorta like the way you instantly recognize the music of Star Wars or Jaws when I ask you to recall it: you know it by heart. The researchers stated that, “many processes in the brain occur automatically and without involvement of our consciousness. This prevents our mind from becoming overloaded by simple routine tasks. But when it comes to decisions we tend to assume they are made by our conscious mind. This is questioned by our current findings.”5 The brain’s decision happens up to seven whole seconds before we realize we’ve made it. I believe this behavior makes a big, splashy appearance in system one decision making, where cognitive biases steer our understanding away from technology. The light switch flicks ‘on,’ and a conclusion is leapt to on our behalf before we even recognize it.

Children of the 90s will no doubt recall blowing air into Nintendo cartridges. In this example, system one dominated the decision-making process. Had we taken the time to count how many times blowing worked versus how many times it didn’t, we would have realized how ineffective it truly was. That did not stop kids from sharing the secret as a holy potion for resolving our gaming woes. As a result, most kids from the era recall trying every method short of voodoo to get their games to play. Little did we kids realize that the real culprit behind the faulty cartridges was simply poor contact between the cartridge and the slot. Correctly or not, these biases affected our decisions by filtering our perception through the evolutionary imperative of preserving energy. Spread across a lifetime, this tradeoff can alter the very trajectory of our lives.

Our brains developed the ‘fight or flight’ imperative to react rapidly to danger within our environment. When visually scanning a landscape, we’re built to prioritize bad news first, so clues to the presence of a predatory threat immediately stand out. Much of the technology world, however, exists as invisible infrastructure: we can’t smell it or taste it, and in many instances we can’t touch it. Our inability to perceive technology past a screen, despite all of our evolved senses, is emblematic of technology’s complex nature. With unknown inputs and outputs, that complexity can make us a bit resistant to pursuing further self-education in technology.